Animals have legal rights, so why not AI? It’s not as crazy as it sounds

Gaps in the law mean there may be questions of responsibility if artificial intelligence causes harm, and questions of ownership if it creates something valuable, writes Jacob Turner

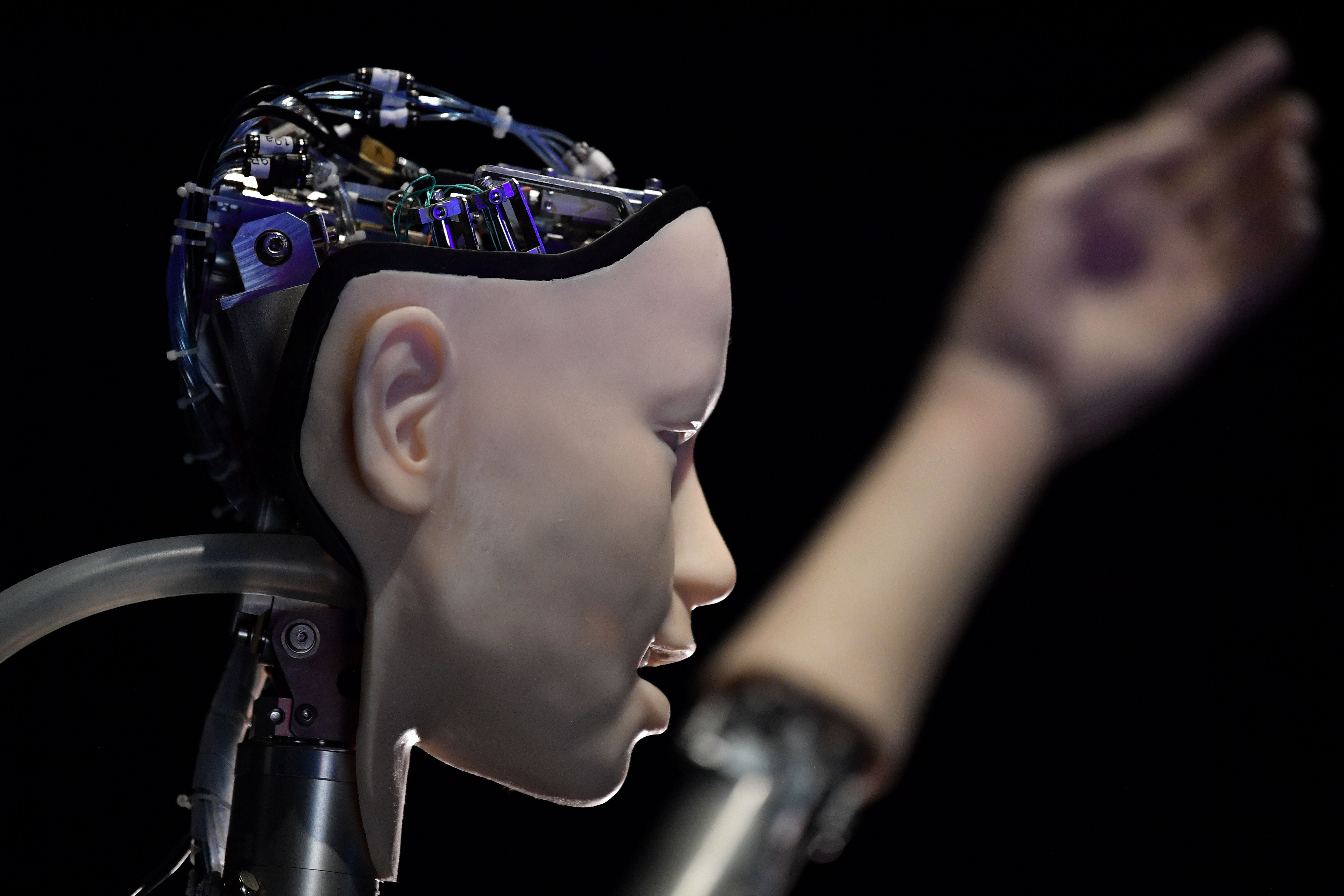

It sounds strange to talk about giving rights to robots. But this week the idea was debated in parliament.

Parliament has always been an innovator when it comes to rights, of course. Two hundred years ago, an MP proposed a law to protect animals. At first he was laughed out of Westminster. One of his opponents was concerned that people might be punished for “the boiling of lobsters, or the eating of oysters alive.”

Despite being thought an odd idea by many people at the time, the Ill-Treatment of Cattle Act was passed in 1822. It was one of the first pieces of animal rights legislation anywhere in the world. And now the government is going further, planning a new law to recognise Animal Sentience. Members of the House of Lords worried that it could prevent restaurants cooking lobsters – clearly a point of great concern to some politicians, both then and now.

But why might we want to create new rights now for artificial intelligence?

AI is unique because it is the first technology in history that can make decisions for itself. This creates a problem because all existing systems of law govern human decision-making. Our laws generally don’t say what should happen if a non-human makes a decision that leads to real-world consequences.

Gaps in the law mean there may be questions of responsibility if AI causes harm, and questions of ownership if AI creates something valuable, like a new painting or even a new vaccine.

One answer is to require that AI can only ever make recommendations for a human, who must then decide what to do. However, AI is already much better than humans at some things, so imposing a human decision-maker would only introduce errors and take away speed.

Always giving humans the final say on AI decisions could turn out like the laws in the 19th century which required a man to walk in front of every car waving a red flag, meaning that traffic in England could only move at walking pace.

Another solution would be to grant AI a form of legal personality.

We already interact with many artificial legal persons in our daily life. Companies, charities, even nation states only exist when we believe they do.

People often think of corporations as being the tools of capitalism. In fact the first corporations created by statute were instead intended to serve public functions, like the building of hospitals and universities. It was only later that private companies were allowed to be incorporated.

Rather than treating it as a novelty, it may be better to think of AI legal personality as a rediscovery of old ideas of the common good.

Some criticise the concept because human programmers could use it cynically to evade responsibility for wrongdoing. But exactly the same criticisms could be made of companies, and no one is suggesting that we abolish companies. Instead the law has developed principles to allow for humans to be held responsible in exceptional cases.

When talking about potential new legal persons, it is important to remember two things. First, there is a long menu of rights to choose from and granting some rights does not mean granting all of them.

Animals have a right not to be treated with cruelty, but they don’t have a right to hold property. Companies have a right to hold property, but they don’t have rights to vote or marry.

Granting some legal rights to AI doesn’t mean we need to treat it anything like humans or even animals. Instead, AI with legal rights might be more like a company. An AI legal person should still need to have ultimate human owners and directors, just as companies do.

The second point is that with a legal personality, rights come with responsibilities. Creating corporations makes it easier to find an entity to sue if that corporation causes harm. Corporations allow for collective actions and collective responsibility.

We created artificial legal persons hundreds of years ago to serve human aims: to encourage innovation and risk-taking by entrepreneurs, and to protect members of the public by providing a target for legal claims.

Perhaps we should not ask what we can do to protect robot rights. We should ask what protecting robot rights can do for us.

Jacob Turner is a barrister and author of Robot Rules: Regulating Artificial Intelligence.
