AI is coming to war, regardless of Elon Musk’s well-meaning concern

AI will have the power to escalate, and perhaps even initiate action, before we can stop it

Elon Musk thinks we should ban artificially intelligent weapons before it’s too late, and a galaxy of star researchers agree. This week, 116 of them wrote an open letter warning that time was running out. The danger is real – though it’s not the one the public often imagines, of some malign Skynet-like computer developing a mind and agenda of its own.

Yet the chances of any ban are slim to none.

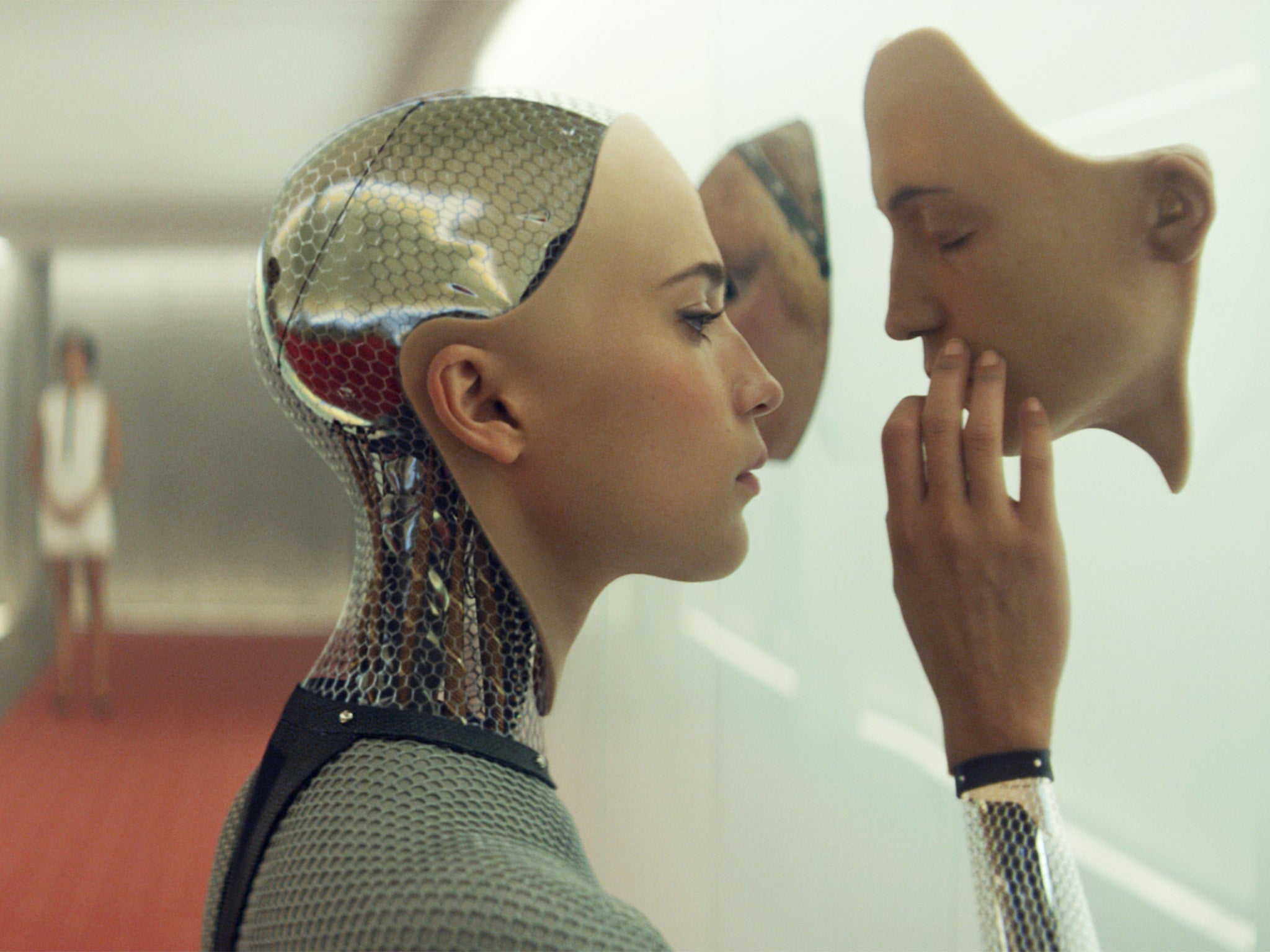

Why would you want to outlaw battlefield AI? The most common fear comes via Hollywood – that an AI might decide to attack us on its own initiative. Think of The Terminator, Ex Machina or 2001: A Space Odyssey; it’s a recurrent theme. But the movie version is unlikely. Machines may eventually develop their own motivations, perhaps even their own consciousness, but we are a very long way from that; and if it ever comes, it will look very different from our evolved, biological cognition and motivations.

A second concern is that machines would be indiscriminate killers – perhaps targeting civilians. In one narrow sense, machines are likely to be rather more reliable than humans at hitting targets – they won’t feel the effects of fatigue or fear, and they are much better at recalling previous examples.

Still, modern AI is extraordinarily poor at understanding context – think Apple’s Siri, but with guns. An AI can grasp what you are saying literally – as when Siri effortlessly transcribes your words, no matter how thick your accent. But as Siri’s answers demonstrate, modern AI has very little idea what anything means. On the battlefield, is that person reaching for the gun to surrender, or to shoot?

The real danger from AI weapons, however, is that they’ll be rather too good at what they do. Speed is their big advantage over humans on the battlefield. And they work too quickly for us to intervene when we might need to – for example, if our goals change after we have instructed our robot army. The Ministry of Defence wants to keep a human “in the loop”, but at such speed there simply won’t be time: the enemy might not be so squeamish, and their robots will be faster and deadlier.

So we can agree – AI is dangerous. But we disagree about regulation. Battlefield AI is almost impossible to regulate.

For one thing, there’s a powerful “security dilemma” here. A tiny qualitative advantage in AI will be utterly decisive in battle, so there’s a huge incentive to cheat. For another, it will be hard to agree what constitutes AI – there are many different approaches and definitions, and the state of the art is shifting rapidly. Indeed, AI is already here on the battlefield, and has been for years – as with the American Aegis air defence system.

Lastly, AI is hard to regulate in practice. The concerned AI scientists remind me of the Pugwash anti-nuclear committee of the 1950s. But with AI, there is no tell-tale large infrastructure to monitor, like uranium enrichment plants or test facilities. Much of the necessary technology is ‘dual use’ – AI’s value is as a decision-making technology, not a weapon per se. The same brilliant decision-making algorithms could save lives in hospitals and on the roads, yet be applied to taking them in battle.

AI is coming to war, regardless of Musk’s well-meaning concern. Already an AI can effortlessly outperform a skilled human fighter pilot in simulators. So, are we doomed without regulation?

Not inevitably, but these will be dangerous and unsettled times. This isn’t science fiction: the AI that is already here is dangerous. Soon, it will change military power rapidly – outmoding non-AI armies, and undermining existing defences, like our nuclear deterrent. It may encourage adventurism by states that no longer need fear sending troops into harm’s way. Most dangerous, in my view, is that AI will have the power to escalate, and perhaps even initiate action, before we can stop it. Without a ban, we’ll need new ways to defend against intelligent machines. And that’s inevitably going to require even more AI.

Dr Kenneth Payne is a Senior Lecturer in the School of Security Studies at King’s College London. His next book, Strategy Evolves: From Apes to Artificial Intelligence is out in the New Year.