Killer robots are coming to San Francisco police. That should alarm everyone

The ACLU is alarmed about this development, saying on Twitter that ‘robot police dogs open a wide range of nightmare scenarios, from being weaponized to being made autonomous’

On Tuesday, San Francisco’s Board of Supervisors approved the use of police robotics that can be used to kill. The robots — which, according to a spokesperson, could be used to “incapacitate, or disorient violent, armed, or dangerous suspects who pose a risk of loss of life” and could have explosives attached to them during missions — are intended for emergency situations.

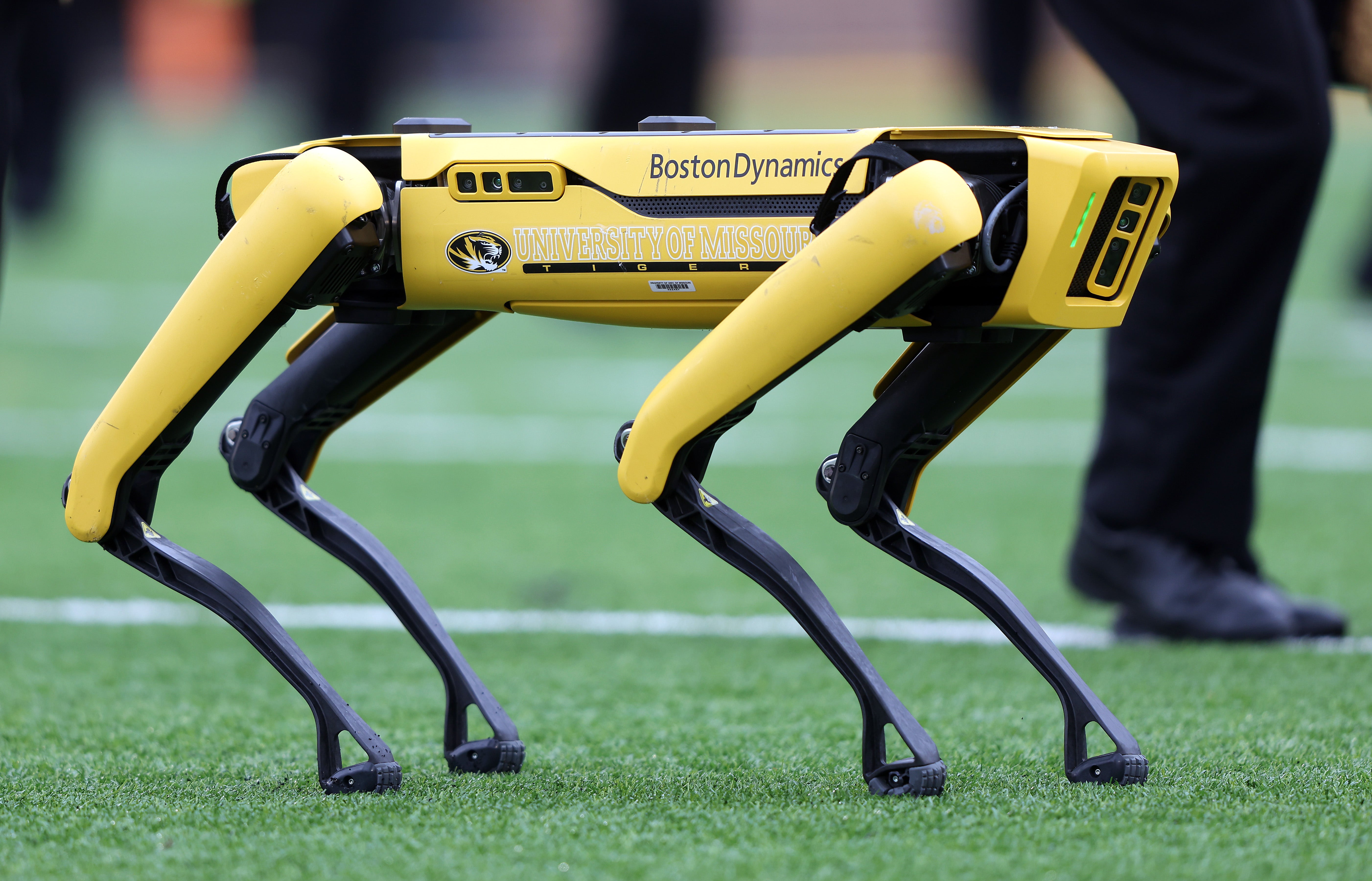

San Francisco police are not the first to flirt with such a techno-dystopian future. In 2020, the NYPD began using a robot canine — the Spot model from Boston Dynamics — in certain situations. The four-legged robot was used sparingly, once in a hostage situation and at least once during an incident at public housing. But the decision was later reversed after a fierce backlash from New York residents. Despite this, the Los Angeles Police Department is now poised to take on Spot — which costs about $300,000 to acquire — after positive feedback at a Police Commissioner meeting.

The real-life militarization of robotics and tech is slowly becoming an object of public scrutiny, years after eye-watering amounts of money were poured into its development. It has prompted a litany of ethical objections from the public and from within the teams whose technology police departments are asking to use.

The robotics inventor in the Hollywood film Short Circuit famously described how he had never intended to build a weapon, but instead developed a tool with a very different aim. “Originally I had non-military purposes in mind. I designed it as a marital aid,” he says in the movie. But the military in the film were quick to recognize the benefits of such technology: “It’s the ultimate soldier. Obeys orders and never asks questions.” That (admittedly fictional) exchange underlines how easily technology can be weaponized, even if its developers don’t intend it or can’t even comprehend how it might be done.

In recent years, a number of robotics designers and engineers have come forward saying that they do not support their projects being weaponized. In an open letter “to the robots community and our communities,” Boston Dynamics wrote: “We pledge that we will not weaponize our advanced-mobility general-purpose robots or the software we develop that enables advanced robotics and we will not support others to do so. When possible, we will carefully review our customers’ intended applications to avoid potential weaponization… We understand that our commitment alone is not enough to fully address these risks, and therefore we call on policymakers to work with us to promote safe use of these robots and to prohibit their misuse. We also call on every organization, developer, researcher, and user in the robotics community to make similar pledges not to build, authorize, support, or enable the attachment of weaponry to such robots.” However, the use of Spot by police departments — and the latest announcement from San Francisco — shows there are gray areas that such developers can’t foresee and can’t necessarily prevent.

The simple fact is that our police are already responsible for so many acts of violence across the country. Over 1,000 individuals are reported to have lost their lives to police officials across the country in 2022. Fatal police shootings disproportionately affect Black and Hispanic people. Homeless individuals have died at the hands of the police — and specifically the LAPD — without the police facing consequences. This is just what is known to the public. Due to the secrecy of police commissions across the country, the true picture is likely uglier than what we know. How can we expect a lethal robot tool to be used in the hands of such police officers — and what will be a victim or their family’s recourse if they are killed by such weaponized technology?

While there are legal avenues to pursue wrongful actions by individual, human police officers, even these are extremely imperfect. Unions and commissions routinely shield law enforcers from public scrutiny. And in cases where police officers are held to account — such as in the case of Culver City Police Sergeant Eden Robertson, who was deemed to have publicly doxxed a resident on a Facebook messenger board — it’s not uncommon for the officer’s actions to be overlooked later in their career, or for them to simply move to a different city or area and join another police force unsanctioned. Robertson, for example, who was allegedly “disciplined” for her actions, was not only promoted from sergeant to lieutenant after publicly doxxing a resident, but was also given a Special Recognition Award.

Adding robotics gives police an extra layer of protection against accountability. How can one prove a wrongful death suit against a robot? How many robot operators in law enforcement will hide behind the idea of malfunction, or of not being able to fully understand the situation on the ground? The words of the ACLU are pertinent here: “Robot police dogs open a wide range of nightmare scenarios, from being weaponized to being made autonomous. We can’t let this dangerous technology onto our streets unchecked.”

I do not want to live in a Hollywood reality, a WarGames scenario where people use technology like a game while also participating in horrific violations of human rights. There is no way that we should even think about introducing such weaponized technology before we address the systemic issues that make it so dangerous. Taking away the qualified immunity laws that protect police officers from accountability and unraveling the unnecessary secrecy of police departments would be a good start. Instead, it seems like we’re headed in the opposite direction.
