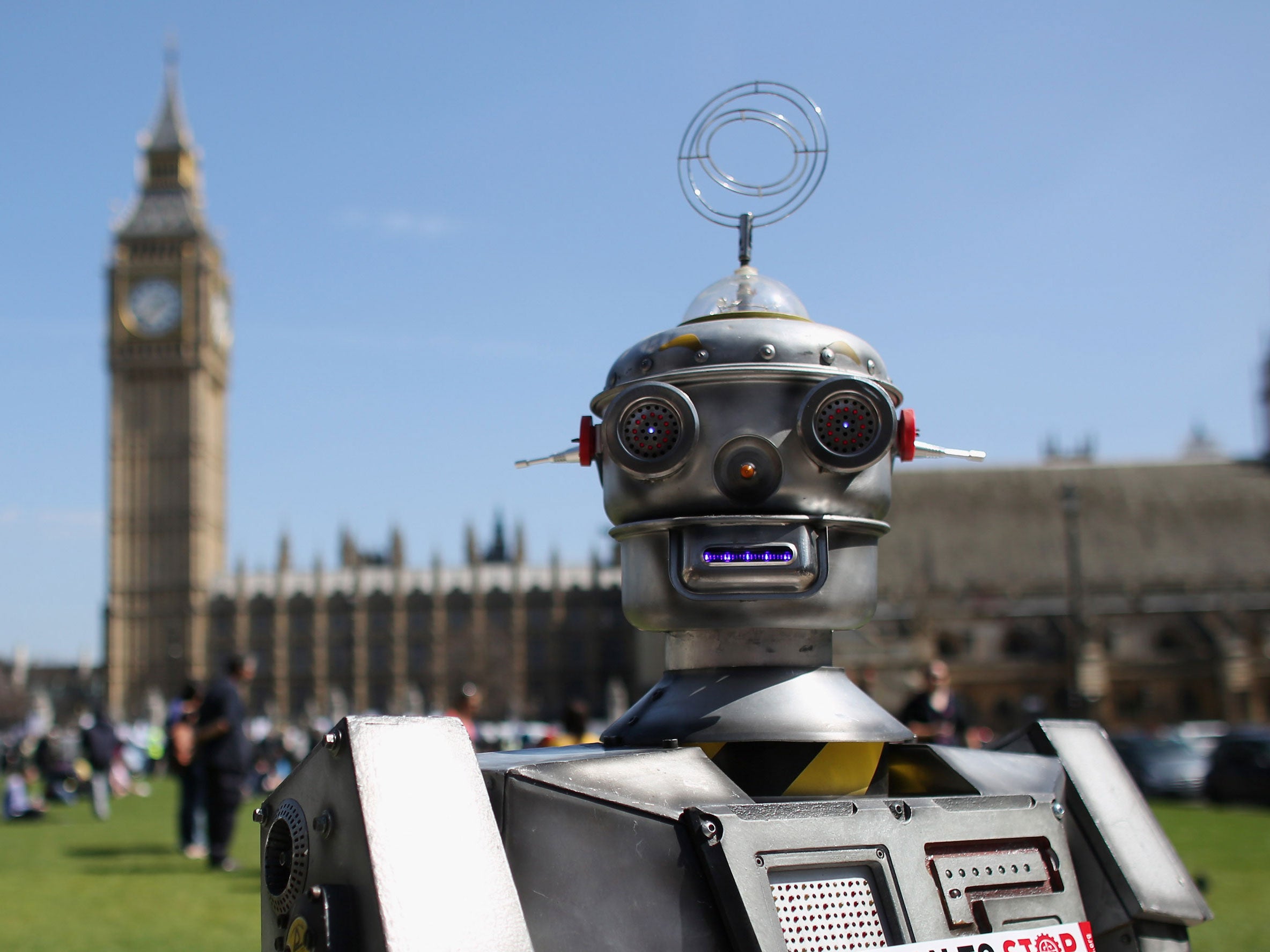

Delegates at Davos held a meeting about the dangers of autonomous 'killer robots'

Delegates to the World Economic Forum meeting in Davos were reportedly very interested in the 'killer robots' issue

The issue of 'killer robots' one day posing a threat to humans has been discussed at the annual World Economic Forum meeting in Davos, Switzerland.

The discussion took place on 21 January during a panel organised by the Campaign to Stop Killer Robots (CSKR) and Time magazine, which asked the question: "What if robots go to war?"

Participants in the discussion included former UN disarmament chief Angela Kane, BAE Systems chair Sir Roger Carr, artificial intelligence (AI) expert Stuart Russell and robot ethics expert Alan Winfield.

Despite coming from very different sectors, the participants agreed on one thing during their hour-long discussion: autonomous weapons (or 'killer robots') pose dangers to humans, and swift diplomatic action is needed to stop their development.

The panelists were quick to distinguish an autonomous weapon from something like a drone, which is unmanned but ultimately controlled by a human.

Autonomous weapons, which are currently being developed by the US, UK, China, Israel, South Korea and Russia, will be capable of identifying targets, adjusting their behaviour in response to those targets, and ultimately firing, all without human intervention.

Sir Roger, the weapons industry representative, said weapons like this would be "devoid of responsibility" and would have "no emotion or sense of mercy."

"If you remove ethics and judgement and morality from human endeavour whether it is in peace or war, you will take humanity to another level which is beyond our comprehension," he said.

The discussion came after more than 3,000 experts from the worlds of science and robotics signed an open letter calling for a ban on 'killer robots'.

Famed physicist Stephen Hawking and technologist Elon Musk were among the signatories to the letter, which was authored by the CSKR.

Currently, the CSKR is lobbying to get the issue of autonomous weapons on the table of the UN Convention on Certain Conventional Weapons, which has previously banned the use of weapons like landmines, booby traps and blinding laser weapons in warfare.

Taking the issue to Davos, where around 2,500 of the world's top business leaders, politicians and intellectuals meet every year, should help their campaign gain more traction among the world's decision makers.

It's not yet possible to build truly 'intelligent' autonomous robots, so their weaponisation is still some way off. However, some nations already use semi-robotic weapons in their militaries.

South Korea has a network of automatic sentry guns along the border with North Korea, which use cameras and heat sensors to detect and track humans all by themselves. However, they still need a human to tell them to fire.
