US army develops new tool to detect deepfakes threatening national security

Scientists believe the advance may help build software that gives an automatic warning when fake videos are played on a phone

US Army scientists have developed a novel tool that can help soldiers detect deepfakes that pose a threat to national security.

The advance could lead to mobile software that warns people when fake videos are played on their phones.

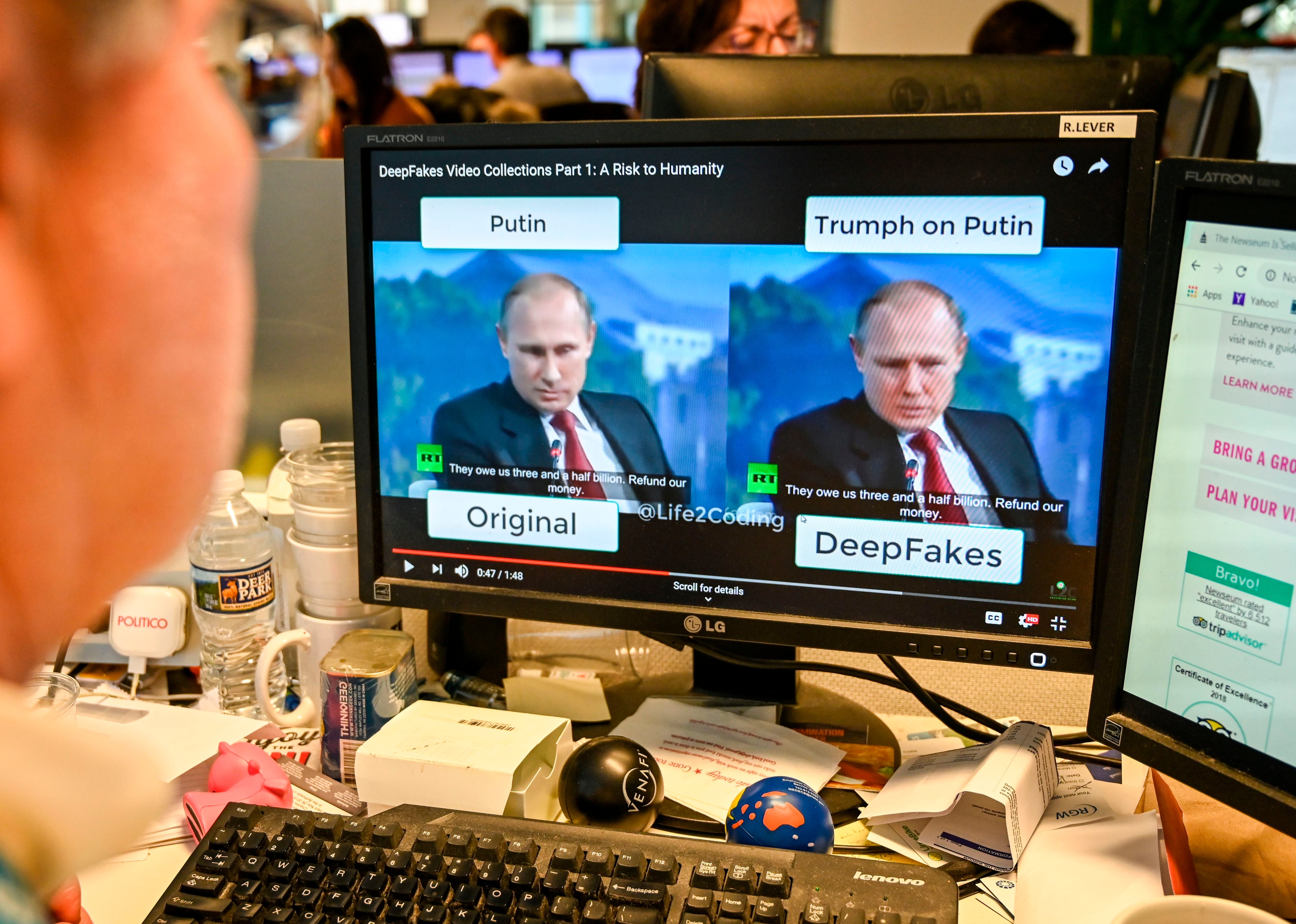

Deepfakes are hyper-realistic video content made using artificial intelligence tools that falsely depicts individuals saying or doing something, explained Suya You and Shuowen (Sean) Hu from the Army Research Laboratory in the US.

The growing number of these fake videos in circulation can be harmful to society, ranging from non-consensual explicit content to media doctored by foreign adversaries for use in disinformation campaigns.

According to the scientists, while there were close to 8,000 of these deepfake video clips online at the beginning of 2019, in just about nine months, this number nearly doubled to about 15,000.

Most of the currently used state-of-the-art deepfake video detection methods are based upon complex machine learning tools that lack robustness, scalability and portability – features soldiers would need to process video messages while on the move – the researchers added.

In the current study, posted as a preprint in the repository arXiv, the researchers describe a new lightweight face-biometric tool called DefakeHop, which meets the size, weight, and power requirements soldiers have.

“Due to the progression of generative neural networks, AI-driven deepfake advances so rapidly that there is a scarcity of reliable techniques to detect and defend against deepfakes,” Mr You said in a statement.

“There is an urgent need for an alternative paradigm that can understand the mechanism behind the startling performance of deepfakes and develop effective defence solutions with solid theoretical support,” he added.

The scientists said they developed the new framework by combining principles from machine learning, signal analysis, and computer vision.

The main advantages of the new method, according to the researchers, are that the AI tool needs fewer training samples, learns faster from the sample data, and offers high performance in detecting fake videos.

“It is a complete data-driven unsupervised framework, offers a brand new tool for image processing and understanding tasks such as face biometrics,” said Jay Kuo, a co-author of the study from the University of Southern California in Los Angeles.

The scientists hope that their new tool will lead to a “software solution that runs easily on mobile devices to provide automatic warning messages to people when fake videos are played.”

“The developed solution has quite a few desired characteristics, including a small model size, requiring limited training data, with low training complexity and capable of processing low-resolution input images. This can lead to game-changing solutions with far reaching applications to the future Army,” the scientists added in the study.
