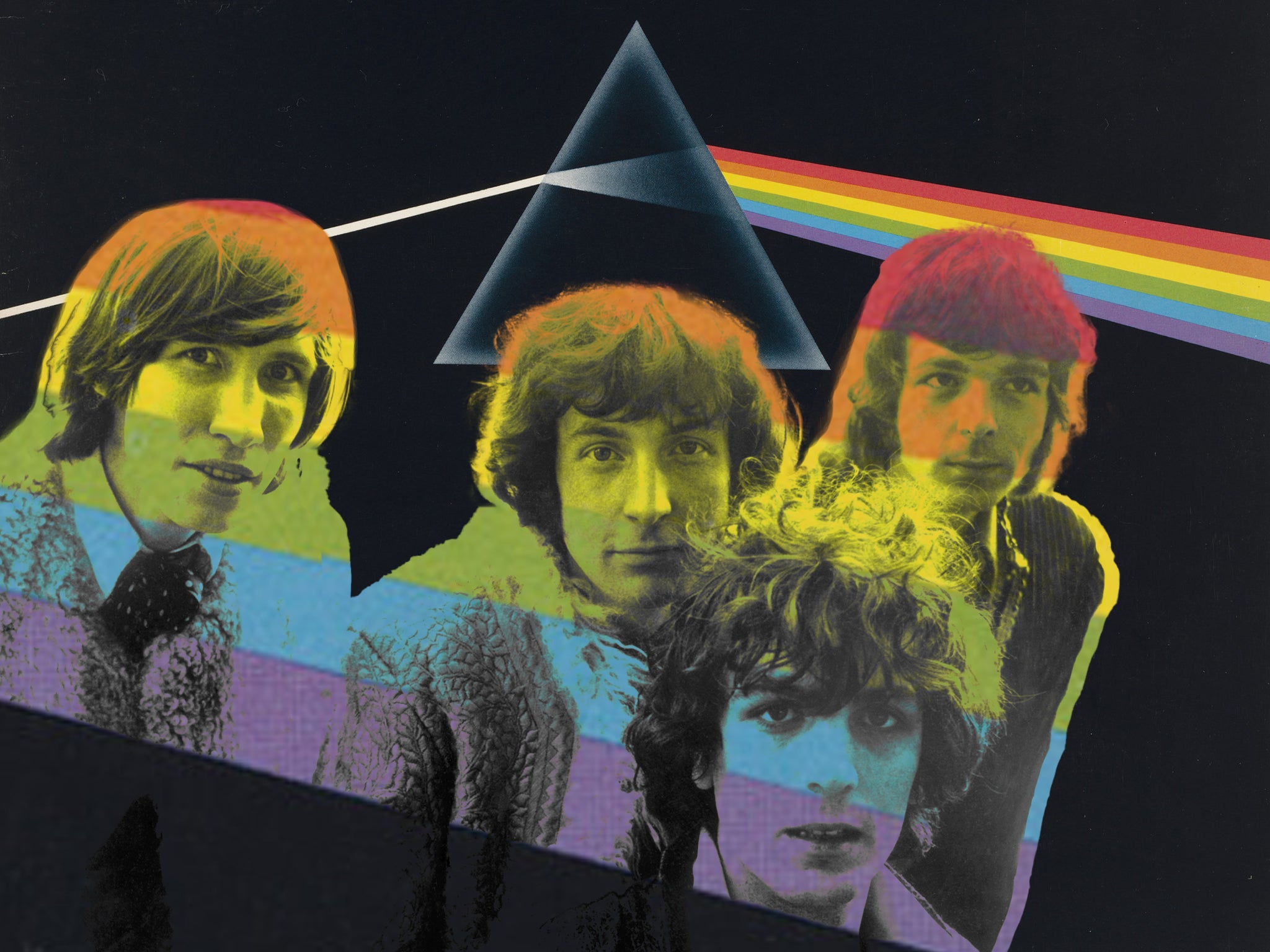

Pink Floyd song reconstructed from person’s brain activity

Scientists were able to decipher the line ‘All in all it’s just another brick in the wall’

Neuroscientists have figured out how to reconstruct a song by decoding the brain signals of someone listening to it.

A team from the University of California, Berkeley, reproduced Pink Floyd’s song ‘Another Brick in the Wall, Part 1’ after placing electrodes on the brains of patients undergoing epilepsy surgery and playing them the music.

Analysis of the brain activity allowed the neuroscientists to recreate the song’s rhythm, as well as to pick out understandable lines such as “All in all it’s just another brick in the wall”.

Scientists have previously used similar brain-reading techniques to try to decipher speech from thoughts, but this is the first time a recognisable song has been reconstructed from brain recordings.

“It’s a wonderful result. One of the things for me about music is it has prosody and emotional content. As this whole field of brain machine interfaces progresses, this gives you a way to add musicality to future brain implants for people who need it, someone who’s got ALS or some other disabling neurological or developmental disorder compromising speech output,” said Robert Knight, a neurologist and UC Berkeley professor of psychology in the Helen Wills Neuroscience Institute who conducted the research.

“It gives you an ability to decode not only the linguistic content, but some of the prosodic content of speech, some of the affect. I think that’s what we’ve really begun to crack the code on.”

It is a significant development for brain-computer interface technology, which aims to connect humans to machines in order to treat neurological disorders or even grant new abilities.

Elon Musk claims that future versions of his Neuralink device will allow wearers to stream music directly to their brain, as well as cure depression and addiction by “retraining” certain parts of the brain.

The scientists behind the latest research say that advances in brain recording could eventually allow them to make detailed recordings using non-invasive techniques, such as ultra-sensitive electrodes attached to the scalp.

“Non-invasive techniques are just not accurate enough today,” said postdoctoral fellow Ludovic Bellier, who was part of the research team.

“Let’s hope, for patients, that in the future we could, from just electrodes placed outside on the skull, read activity from deeper regions of the brain with a good signal quality. But we are far from there.”

The research was detailed in a study, titled ‘Music can be reconstructed from human auditory cortex activity using nonlinear decoding models’, published in the scientific journal PLoS Biology.
