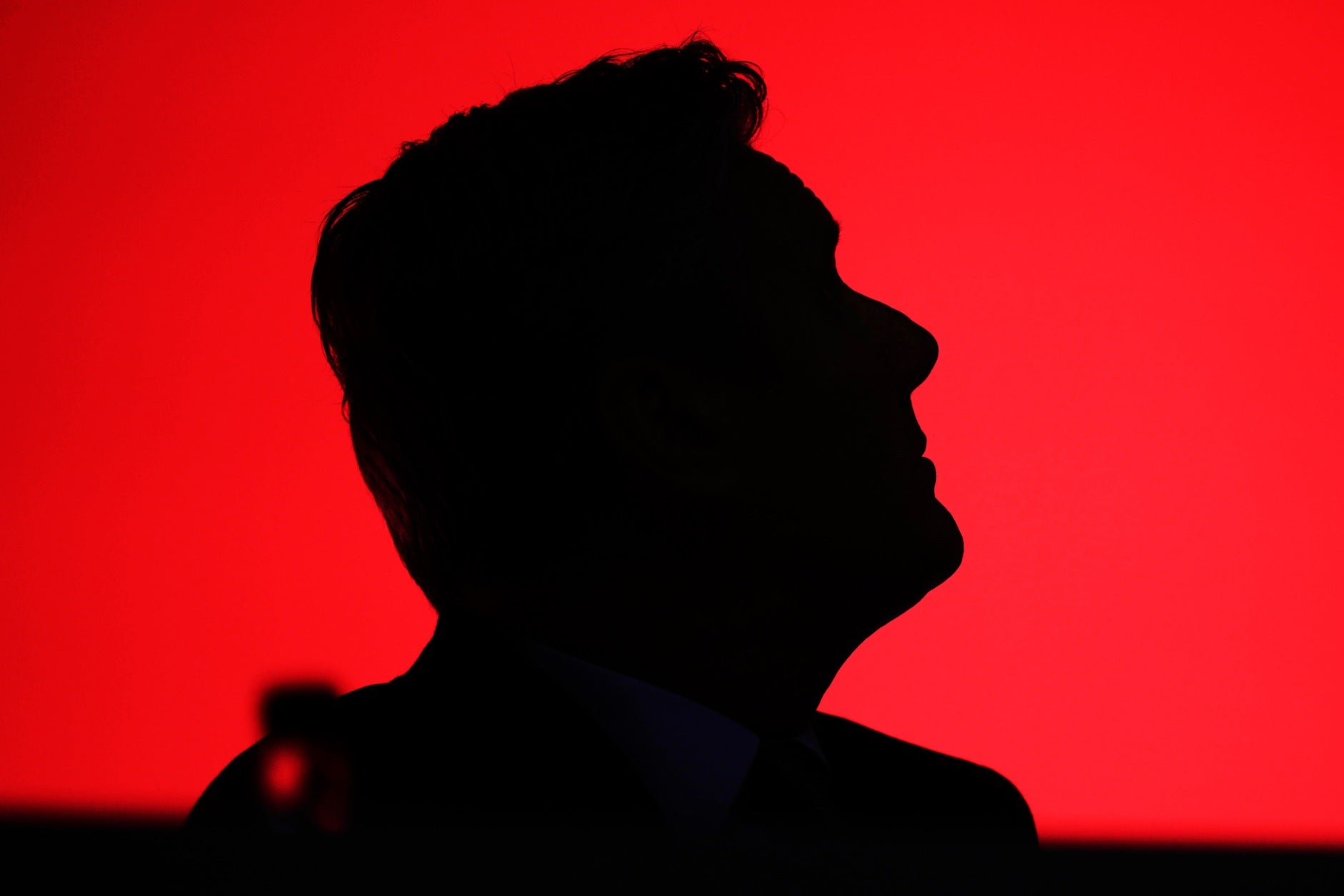

Keir Starmer deepfake shows alarming AI fears are already here

As politicians find themselves targeted by advances in deepfakes, the public will have to be on guard not to get duped, writes Anthony Cuthbertson. But given they are so convincing, is it time for a tech solution?

Just a week after Slovakia scrambled to deal with an AI-generated recording designed to influence national elections, the UK Labour Party has become the target of deepfake audio. “I’m sick of this,” one of the clips, which appeared to be from a secret recording of party leader Keir Starmer, stated. “I f***ing hate Liverpool.”

Despite being debunked and deleted from some platforms, the fake recordings still received millions of views across social media, and highlighted what Tory MP Simon Clarke described as “a new threat to democracy”.

In Slovakia, the purported audio of candidate Michal Simecka plotting to rig the election emerged just two days before it took place – potentially contributing to his loss to his pro-Russia, populist opponent Robert Fico. Unlike Simecka, Starmer isn’t facing an imminent election, but in both cases the AI-generated audio appeared convincing enough to fool people into thinking it was real.

Using deepfake audio, images or video to manipulate voters is an issue that artificial intelligence experts have warned about for years, but these demonstrations show that it is no longer a forecast but a reality.

Before this year, deepfakes were easy to spot. In March 2022, just weeks into Russia’s invasion of Ukraine, a deepfake video of Ukrainian President Volodymyr Zelensky calling on his soldiers to surrender spread across social media. A digitally-infused voice and disconcerting head bobbing would have made its fakeness seem comical if its intentions were not so sinister.

Since then, advances with generative AI tools have seen increasingly deceptive depictions of public figures successfully trick people. Most have been harmless, like the alleged photo of Pope Francis wearing a white puffer coat. Some, like the viral pictures of Trump being arrested, were even created with the explicit intention of showing how advanced the tools have become, and yet were still able to fool people when shared without context.

Freely available software like Stable Diffusion and DALL-E, the latter built by ChatGPT creator OpenAI, means anyone can create realistic images with just a simple text prompt. And while the more established companies have rules in place that attempt to prevent misuse, there are plenty of others that allow users to be limited only by their imagination.

With US elections looming next year, and UK elections set to take place by January 2025, the latest examples show there is an urgent need to address the issue, either through better detection tools or improved public awareness.

A study published this month by comparison site Finder.com found that only 1.5 per cent of people surveyed in the UK could correctly identify deepfake clips of celebrities and politicians. The 2,000-person study revealed that participants not only thought that fake videos were real, but also labelled real videos as fake.

The realism of deepfakes means people are increasingly forced to question everything they see and hear. This unprecedented level of distrust inevitably leads to conspiracists questioning the truthful version of events.

Shortly after the Starmer audio was debunked, users of X/Twitter were using AI detection tools in an effort to prove that it wasn’t a deepfake after all. “The supposedly deepfake ‘Starmer audio’ has now been run through an AI checker and has a 91.44 per cent probability of being a human voice, rather than AI generated,” a user by the handle @LeicesterWorker wrote. “This should be investigated.”

Deepfakes are one of the issues set to be on the agenda at the world’s first AI summit in the UK in November, where representatives from all the major tech firms will meet with lawmakers to discuss potential policies to prevent the misuse and dissemination of AI. With other technological tools like troll farms and bots proving a menace during the last US elections, the addition of ultra-realistic deepfakes could provoke chaos if effective mitigation measures are not implemented in time.
