Why Apple is working hard to break into its own iPhones

In a secret location in Paris, Apple has hired an elite team of laser-wielding hackers to try and crack its iPhones. Andrew Griffin gets an inside look

Last summer, Apple’s iPhones got a new feature that the company hopes you will never need to use and which mostly makes the phones harder to use. The feature is named Lockdown Mode, and Apple stresses that it is not for everyone, calling it an “optional, extreme protection” aimed at “very few individuals” that will be irrelevant to most people.

Many people will never know that the feature exists. But it is one of a range of features that Apple and other companies have been forced to add to their devices as phones and other personal devices become an increasingly important part of geopolitics. In Apple’s response, Lockdown Mode sits alongside other security features as well as detailed security work that aims to stop people breaking into its devices.

That work has largely been done quietly, with Apple focusing much more on its privacy work than on security. But recently it opened up on some of that work, as well as the thinking that led Apple to put so much focus onto a set of features that nobody ever wants to use.

Some of that work is happening now in Paris. The city has a long history of work on security technology – including work on smart cards that saw the early widespread introduction of secure debit cards in France – but the activity at Apple’s facilities in the city is looking far ahead, towards iPhones and other devices that are secret for now and will not appear for years.

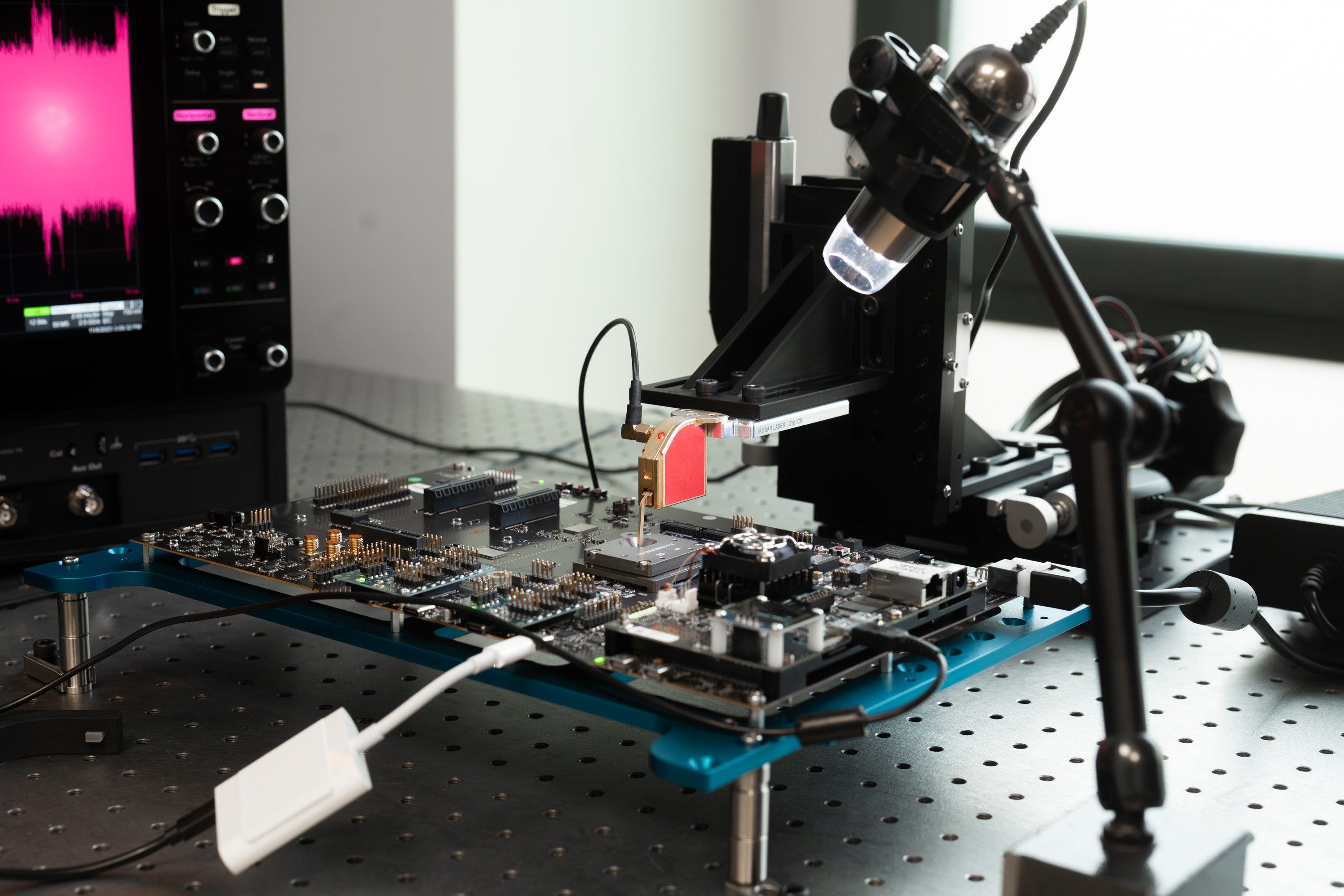

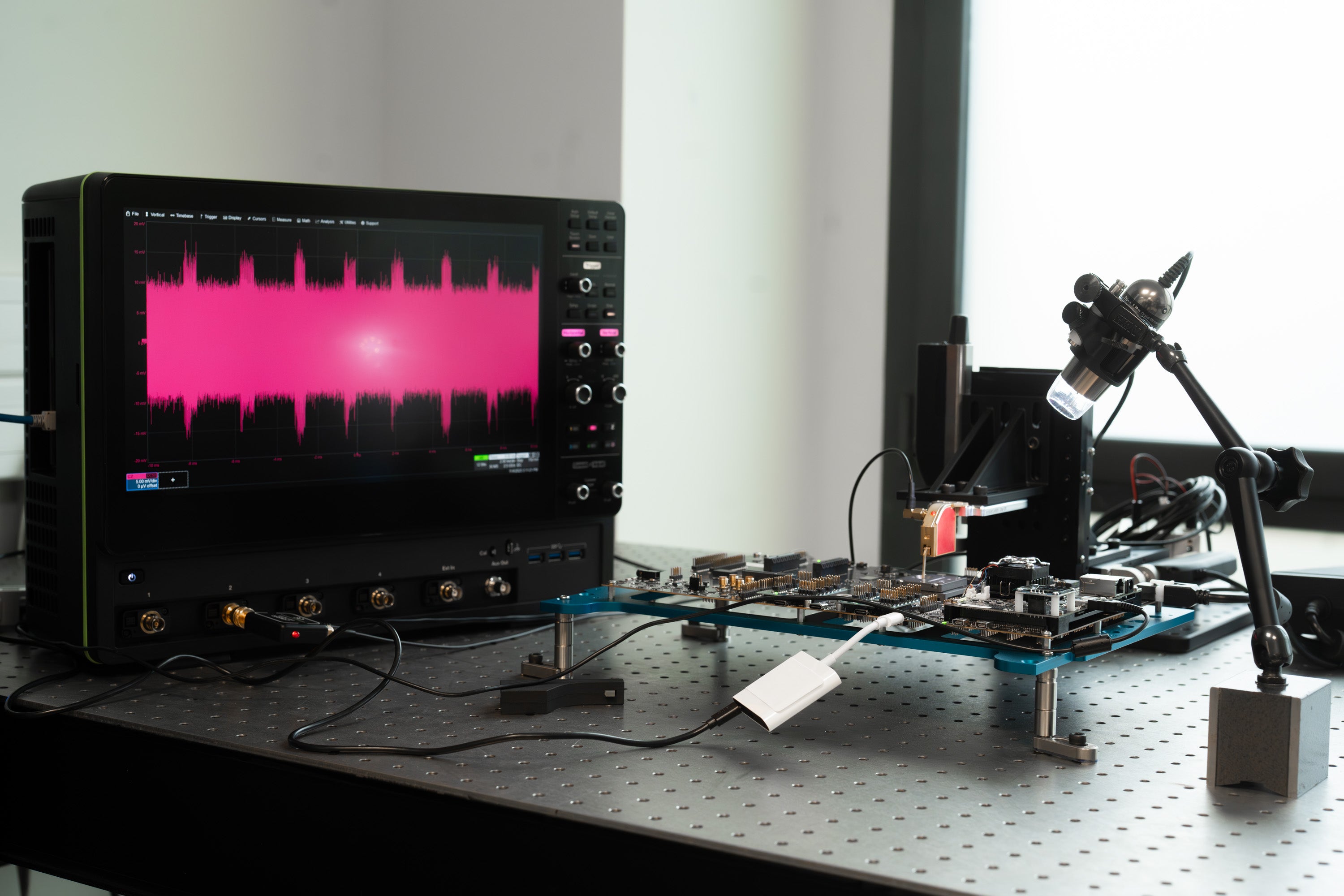

As part of that work in Paris, Apple’s engineers are working hard to break its phones. Using a vast array of technology including lasers and finely tuned sensors, they are trying to find gaps in the phones’ security and patch them up before the devices even arrive in the world.

Unlike with software, where even significant security holes can be fixed relatively simply with a security update, hardware is out of Apple’s hands once a customer buys it. That means that it must be tested years in advance with every possible weakness probed and fixed up before the chip even makes it anywhere near production.

Apple’s chips have to be relied upon to encrypt secure data so that it cannot be read by anyone else; pictures need to be scrambled before they are sent to be backed up on iCloud, for instance, to ensure that an attacker could not grab them as they are transferred. That requires detailed and complicated mathematical work to make the pictures meaningless without the encryption key that will unlock them.

There are various ways that process might be broken, however. The actual chip doing the encryption can show signs of what it is doing: while processors might seem like abstract electronics, they throw out all sorts of heat and signals that could be useful to an attacker. If you asked someone to keep a secret number in their head and let you try to guess it, for instance, you might tell them to multiply the number by two and see how long it takes and how hard they are thinking; if it takes a long time, that suggests the number might be especially big. The same principle is true of a chip; it’s just that the signs are a little different.
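The guessing game above can be sketched in a few lines of Python. This is only an illustration of the general idea of a timing leak, not a description of Apple’s chips or of the tests run in Paris: the function name `modexp_counting_ops`, the modulus and the example secrets are all invented for this sketch, and the step counter stands in for the time or power that an attacker would measure on real hardware.

```python
def modexp_counting_ops(base: int, secret_exponent: int, modulus: int) -> tuple[int, int]:
    """Square-and-multiply exponentiation that also reports how much work it did.

    Returns (result, ops), where ops counts modular multiplications. The count
    is a stand-in for the running time an attacker might observe.
    """
    result = 1
    ops = 0
    for bit in bin(secret_exponent)[2:]:  # walk the secret's bits, most significant first
        result = (result * result) % modulus  # every bit costs one squaring
        ops += 1
        if bit == "1":
            result = (result * base) % modulus  # only 1 bits cost an extra multiply
            ops += 1
    return result, ops


if __name__ == "__main__":
    modulus = 1_000_003  # arbitrary illustrative modulus (an assumption of this sketch)
    for secret in (0b1000, 0b1111, 0b1000_0000_0000):
        value, ops = modexp_counting_ops(3, secret, modulus)
        assert value == pow(3, secret, modulus)  # the answer itself is still correct
        print(f"secret={secret:>5}  operations={ops}")
```

The amount of work depends on the secret: a longer secret needs more squarings, and two secrets of the same length, such as 8 and 15, still take different numbers of steps because of their differing bits. An observer who can only measure how long the calculation takes therefore learns something about a number they never see. A common defence against this kind of leak is to write the calculation so that it does the same amount of work whatever the secret is.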

And so Apple gets those chips and probes them, blasts them with precisely targeted lasers, heats them up and cools them down, and much more besides. The engineers in its Paris facilities doing this work are perhaps the most highly capable and well resourced hackers of Apple’s products in the world; they just happen to be doing it to stop everyone else doing the same. If they find something, that information will be distributed to colleagues who will then work to patch it up. Then the cycle starts all over again.

It is complicated and expensive work. But they are up against highly compensated hackers: in recent years, an advanced set of companies has grown up offering cyber weapons to the highest bidder, primarily for use against people working to better the world: human rights activists, journalists, diplomats. No piece of software better exemplifies the vast resources that are spent in this shadow industry than Pegasus, a highly targeted piece of spyware that is used to hack phones and surveil their users, though it has a host of competitors.

Pegasus has been around since at least 2016, and since then Apple has been involved in a long and complicated game of trying to shut down the holes it might exploit before attackers find and market another one. Just as with other technology companies, Apple works to secure devices against more traditional attacks, such as stolen passwords and false websites. But Pegasus is an entirely different kind of threat, targeted at specific people and so expensive that it would only be used in high-grade attacks. Fighting it means matching its complexity.

It’s from that kind of threat that Lockdown Mode was born, though Apple does not explicitly name Pegasus in its materials. It works by switching off parts of the system, which means that users are explicitly warned when switching it on that they should only do so with good reason, since it severely restricts the way the phone works; FaceTime calls from strangers will be blocked, for instance, and so will most message attachments.

But Lockdown Mode is not alone. Recent years have seen Apple increase the rewards in its bug bounty programme, through which it pays security researchers for finding bugs in its software, after it faced sustained criticism over its relatively small payouts. And work on hardware technologies such as encryption – and testing it in facilities such as those in Paris – means that Apple is attempting to build a phone that is safe from attacks in both hardware and software.

Apple says that work is succeeding, believing it is years ahead of its hackers and proud of the fact that it has held off attacks without forcing its users to work harder to secure their devices or compromising on features. But recent years have also seen it locked in an escalating battle: Lockdown Mode might have been a breakthrough of which it is proud, but it was only needed because of an unfortunate campaign to break into people’s phones. Ivan Krstić, Apple’s head of security engineering and architecture, says that is partly just a consequence of the increasing proliferation of technology.

“I think what’s happening is that there are more and more avenues of attack. And that’s partly a function of wider and wider deployment of technology. More and more technology is being used in more and more scenarios,” says Krstić, pointing not only to personal devices such as phones but also to industry and critical infrastructure. “That is creating more opportunity for more attackers to come forward to develop some expertise to pick a niche that they want to spend their time attacking.

“There was a time that I still well remember when data breaches were seemingly not a wide problem. But of course, they have exploded over the last 10 years or so – more than tripled, between 2013 and 2021. In 2021, the number of personal records breached was 1.1 billion.

“During the same amount of time a number of other attackers have been pursuing new kinds of attack, or different kinds of attacks – against devices, against Internet of Things devices, against really anything that is connected in some way to the internet.

“And I think in a lot of these cases, attackers will go where there is money to be made or some other benefits to be obtained and the nature of the fight for security is to keep pushing the defences forward to keep trying to stay one step ahead of not just where the attacks are today, but also where they’re going.”

Apple doesn’t reveal exactly how much of its money is spent on security work. But it must be significant, both in terms of raw money as well as the extra thought and design required on any given device. What’s the justification for investing so many resources on ensuring that a very small number of people are protected from the most advanced attacks?

“There are two,” says Krstić. “One is that attacks that are the most sophisticated attacks today may over time start to percolate down and become more widely available. Being able to understand what the absolute most sophisticated most grave threats look like today lets us build defences before any of that has a chance to percolate down and become more widely available. But I think that’s the smaller of the two reasons.

“When we look at how some of this state-grade mercenary spyware is being abused, the kinds of people being hit with it – it’s journalists, diplomats, people fighting to make the world a better place. And we think it’s wrong for this kind of spyware to be abused in this way. We think that those users deserve trustworthy, safe technology, and the ability to communicate safely and freely, just as all our other users.

“So this was, for us, not a business decision. It was… doing what’s right.”

Apple’s focus on security places it into a difficult geopolitical situation of the kind it has often studiously avoided. Late last month, for instance, Indian opposition leaders started receiving threat notifications warning them that their devices might be attacked. Neither the notifications nor Apple more generally named who was doing it, and Apple says that the warnings could be a false alarm – but nonetheless the Indian government pushed back, launching a probe of the security of Apple’s devices.

It is not the kind of difficulty that comes even with other security work; those stealing passwords or scamming people out of money don’t have lobbyists and government power. The kind of highly targeted, advanced attacks that Lockdown Mode and other features guard against, however, are costly and complicated, meaning they will often be carried out by governments that could cause difficulties for Apple and other technology companies. How is Apple guided in situations where it could potentially be up against governments and other powerful agencies?

“We do not see ourselves as set against governments,” says Krstić. “That is not what any of this work is about. But we do see ourselves as having a duty to defend our users from threats, whether common or, in some cases, truly grave.”

He declines to give precise details about how the company has dealt with those difficulties in the past. “But I think when you look at what’s been driving it, when you look at these cases that I’ve pointed to and when you look at what the response has been to the defences we built and how we’ve been able to protect some of these users, we feel very strongly like we’re doing the right thing.”

Threat notifications are not the only part of Apple’s security work that has caused issues with authorities. Another much larger debate is coming, and might potentially bring a much more substantial change.

The European Union’s recently signed Digital Markets Act requires that what it calls gatekeepers – Apple and other operators of app stores – allow sideloading, or letting people put apps on their phones from outside of those app stores. At the moment, iPhones can only run apps downloaded from the official store; Apple says that is an important protection, but critics argue that it gives the company too much power over the device.

The introduction of sideloading is just one of the many controversial parts of the Digital Markets Act. But if it goes through as planned, the company will be forced to let people head to a website and download a third-party app, without standing in the way. The European Commission has made very clear that it believes that is required for fair competition, and that it thinks that will give users more choice about what apps they use and how they get them.

Krstić does not agree, and Apple has been explicit in its opposition to sideloading. The idea that people are being given an extra choice – including the choice of sticking with the App Store and keeping its protections – is a false one, he says with some frustration.

“That’s a great misunderstanding – and one we have tried to explain over and over. The reality of what the alternative distribution requirements enable is that software that users in Europe need to use – sometimes business software, other times personal software, social software, things that they want to use – may only be available outside of the store, alternatively distributed.

“In that case, those users don’t have a choice to get that software from a distribution mechanism that they trust. And so, in fact, it is simply not the case that users will retain the choice they have today to get all of their software from the App Store.”
