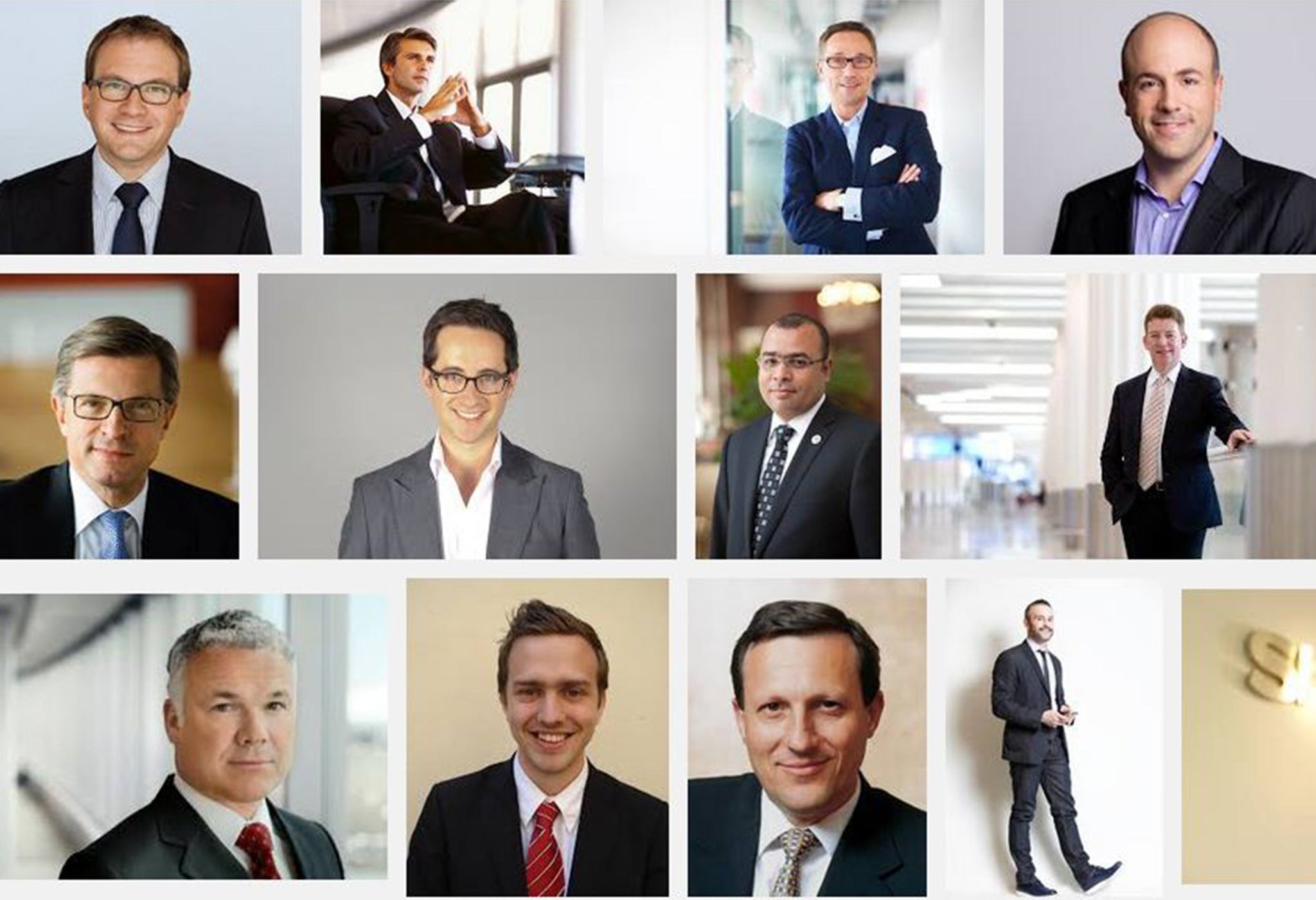

Google's algorithm shows prestigious job ads to men, but not to women

This isn’t the first time that algorithmic systems have appeared to be sexist

Fresh off the revelation that Google image searches for “CEO” only turn up pictures of white men, there’s new evidence that algorithmic bias is, alas, at it again. In a paper published in April, a team of researchers from Carnegie Mellon University claim Google displays far fewer ads for high-paying executive jobs…

… if you’re a woman.

“I think our findings suggest that there are parts of the ad ecosystem where kinds of discrimination are beginning to emerge and there is a lack of transparency,” Carnegie Mellon professor Anupam Datta told Technology Review. “This is concerning from a societal standpoint.”

To come to those conclusions, Datta and his colleagues basically built a tool, called Ad Fisher, that tracks how user behavior on Google influences the personalized Google ads that each user sees. Because that relationship is complicated and based on a lot of factors, the researchers used a series of fake accounts: theoretical job-seekers whose behavior they could track closely.

That online behavior, visiting job sites and nothing else, was the same for all the fake accounts. But some listed their sex as male and some as female.

The Ad Fisher team found that when Google presumed users to be male job seekers, they were much more likely to be shown ads for high-paying executive jobs. Google showed the ads 1,852 times to the male group — but just 318 times to the female group.
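To put those two figures side by side, here is a minimal arithmetic sketch. It uses only the impression counts quoted above. The variable names are illustrative, and it assumes the male and female groups were comparable in size, which the article does not state.

```python
# Impression counts for the executive-job ads, as reported in the article.
male_impressions = 1852
female_impressions = 318

# How many times more often the ads were shown to the male group.
ratio = male_impressions / female_impressions

# Share of all these impressions that went to the male group.
# Assumption: the two groups were of comparable size (not stated in the article).
male_share = male_impressions / (male_impressions + female_impressions)

print(f"male-to-female impression ratio: {ratio:.1f}")
print(f"share of impressions shown to the male group: {male_share:.0%}")
```

On these numbers, the male group saw the ads nearly six times as often as the female group.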

This isn’t the first time that algorithmic systems have appeared to be sexist, or racist for that matter. When Flickr debuted image recognition tools in May, users noticed the tool sometimes tagged black people as “apes” or “animals.” A landmark study at Harvard previously found serious discrimination in online ad delivery, such as searches for ethnic names on Google turning up more results around arrest records. Algorithms have hired by voice inflection. The list goes on and on.

But how much of this is us and how much of this is baked into the algorithm? It’s a question that a lot of people are struggling to answer.

After all, algorithmic personalization systems, like the ones behind Google’s ad platform, don’t operate in a vacuum: They’re programmed by humans and taught to learn from user behavior. So the more we click or search or generally Internet in sexist, racist ways, the more the algorithms learn to generate those results and ads (supposedly the results we would expect to see).

“It’s part of a cycle: How people perceive things affects the search results, which affect how people perceive things,” Cynthia Matuszek, a computer ethics professor at University of Maryland and co-author of a study on gender bias in Google image search results, told The Washington Post in April.

Google cautions that some other things could be going on here, too. The advertiser in question could have specified that the ad only be shown to certain users for a whole host of reasons, or the advertiser could have specified that the ad only show on certain third-party sites.

“Advertisers can choose to target the audience they want to reach, and we have policies that guide the type of interest-based ads that are allowed,” reads a statement from Google.

The interesting thing about the fake users in the Ad Fisher study, however, is that they had entirely fresh search histories: In fact, the accounts used were more or less identical, except for their listed gender identity. That would seem to indicate either that advertisers are requesting that high-paying job ads only display to men (and that Google is honoring that request) or that some type of bias has been programmed, if inadvertently, into Google’s ad-personalization system.

In either case, Datta, the Carnegie Mellon researcher, says there’s room for much more scholarship, and scrutiny, here.

“Many important decisions in society these days are being made by algorithms,” he said. “These algorithms run inside of boxes that we don’t have access to the internal details of. The genesis of this project was that we wanted to peek inside this box a little to see if there are more undesirable consequences of this activity going on.”

©Washington Post
