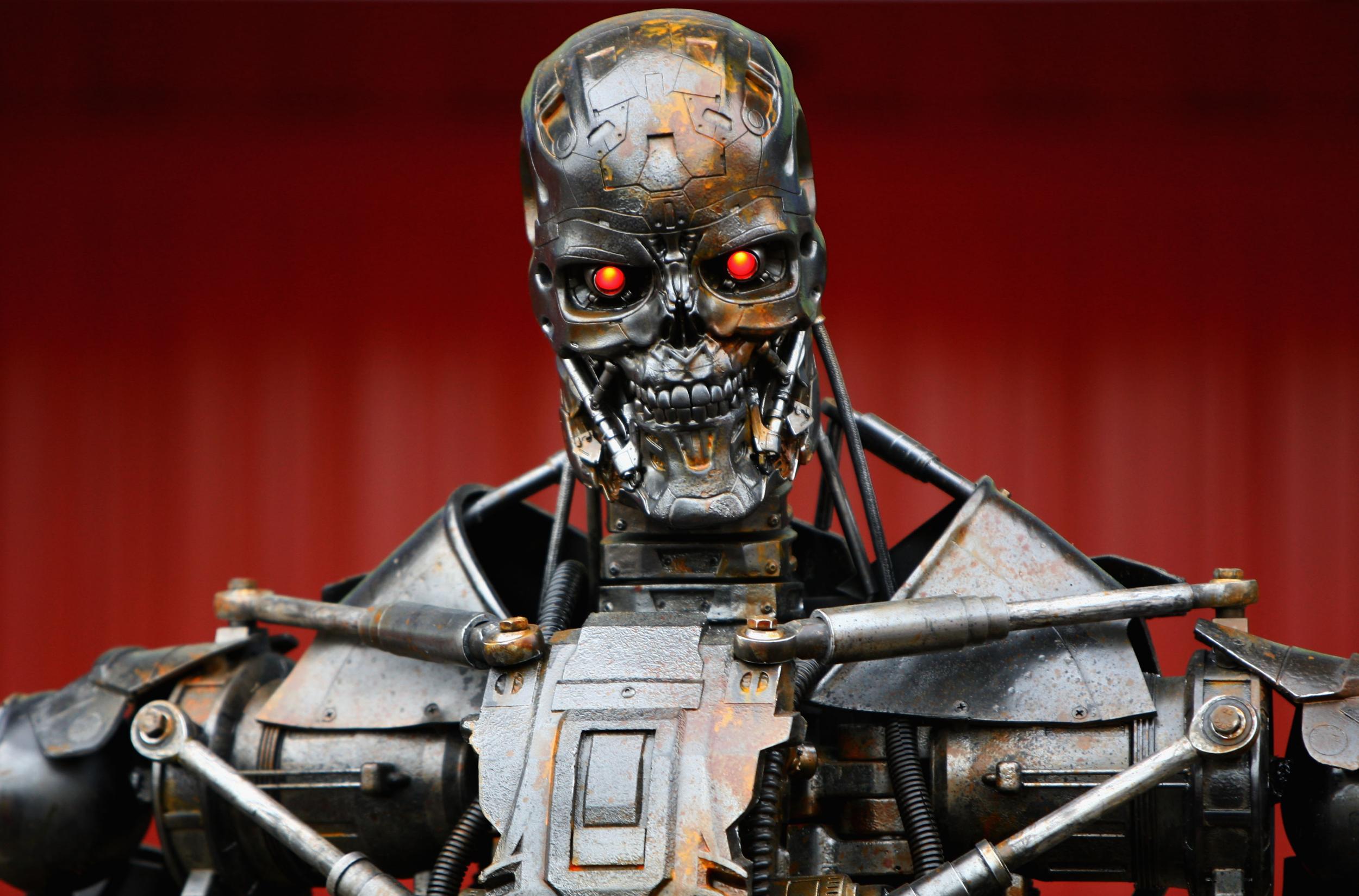

Facebook teaches bots to appear more human-like

'Small variations in expression can be very informative'

Facebook is teaching robots to appear more human-like.

The company’s AI lab has developed a bot that can analyse the facial expressions of the person it’s interacting with, and adjust its own appropriately.

It’s controlled by a deep neural network, which watched 250 video recordings of two-person Skype conversations – where both faces were displayed side-by-side – as part of its training.

The researchers identified 68 “facial landmarks” that the system monitored, in order to detect subtle responses and micro-expressions.

“Even though the appearances of individuals in our dataset differ, their expressions share similarities which can be extracted from the configuration of their facial landmarks,” they explained in a paper spotted by New Scientist.

“For example, when people cringe the configuration of their eyebrows and mouth is most revealing about their emotional state.

“Indeed, small variations in expression can be very informative.”

They then tested the bot on a group of humans.

Participants were asked to “look at how the [bot’s] facial expressions are reacting to the user’s, particularly whether it seemed natural, appropriate and socially typical”, and to decide whether the bot seemed to be engaged in a conversation.

The bot passed the test, with the judges considering its facial expressions to look “natural and consistent” and “qualitatively realistic”.

“Interactive agents are becoming increasingly common in many application domains, such as education, health-care and personal assistance,” said the researchers.

“The success of such embodied agents relies on their ability to have sustained engagement with their human users. Such engagement requires agents to be socially intelligent, equipped with the ability to understand and reciprocate both verbal and non-verbal cues.”

They say the natural next step is to have the bot interact with a real human in the real world.
