
Tom Cruise deepfake maker reveals how he did it, raising fears that colleagues in video calls may be criminals

‘This went from being cool to starting to scare me,’ says one TikTok user

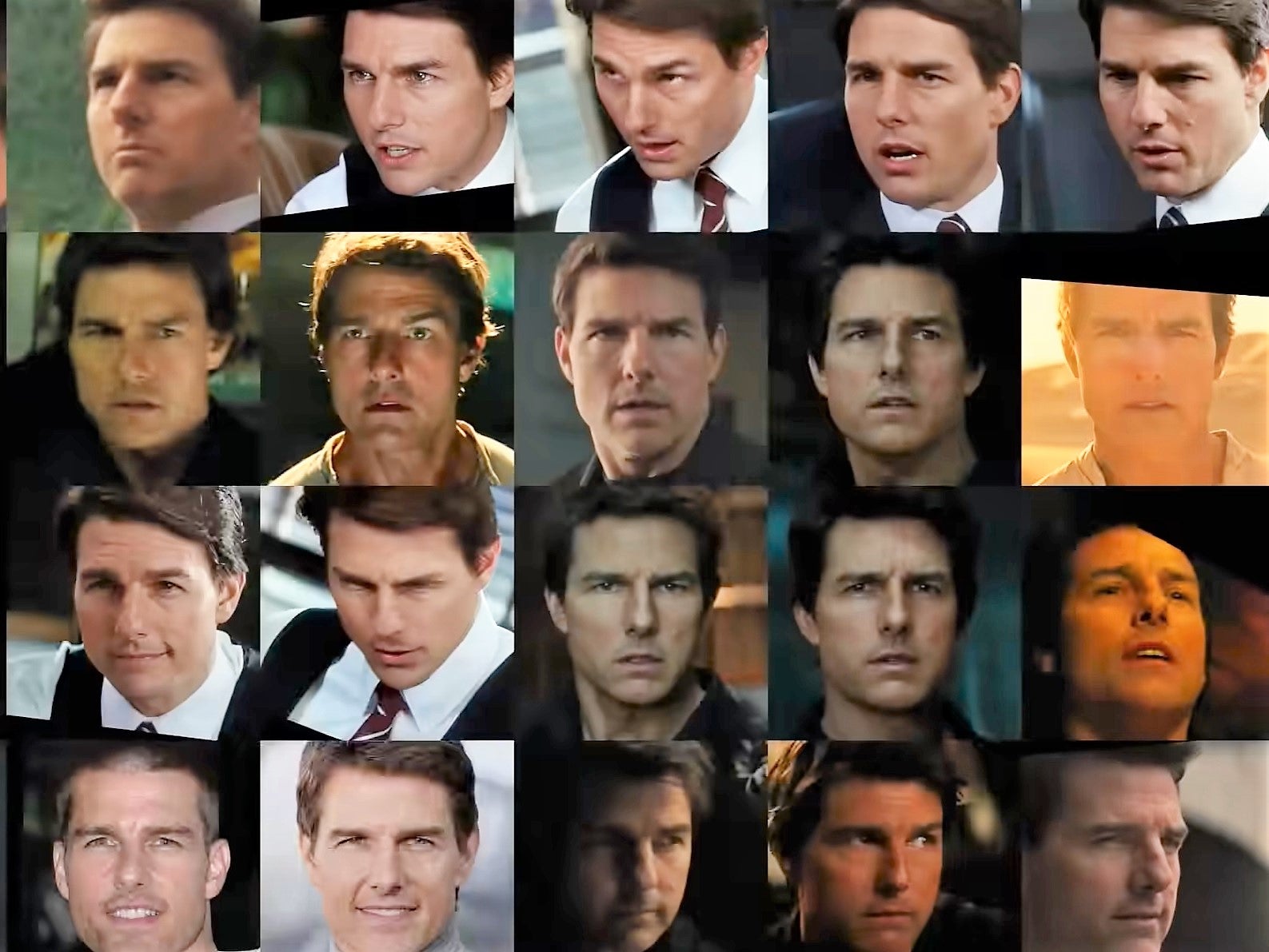

A viral video series appearing to show actor Tom Cruise playing golf and performing magic tricks has raised concerns about the potential of deepfake technology to spread conspiracy theories and disinformation online.

The Cruise clips are so realistic that they initially fooled some people into thinking that the Hollywood actor had joined TikTok, with the account gaining hundreds of thousands of followers after posting just a few videos.

Many of those who spotted that the account was fake – the channel is called @deeptomcruise – noted how far the technology has come in just a few years. One user commented: “This went from being cool to starting to scare me.”

But while deepfakes may be becoming more ubiquitous and advanced, creating such ultra-realistic videos is still far beyond the abilities of the average person.

Visual effects artist Chris Ume revealed how he used a Tom Cruise impersonator combined with machine learning software in order to achieve the technological trickery.

The technology is already being used commercially in a range of industries: from company training videos in the US, to TV news reports in South Korea.

It has also been used in a number of video stunts and pranks, such as a deepfake of the Queen in Channel 4’s alternative Christmas speech, and a fake version of the Donald Trump ‘Pee Tape’.

The technology is both cost-effective and relatively easy to use thanks to the emergence of companies like Synthesia that are dedicated to creating AI-generated videos for corporate clients. However, the speed at which the technology is advancing has led to concerns about malicious users gaining access to deepfake software in order to create things like dangerous propaganda or revenge porn.

Realistic deepfakes could also be used to carry out cyber attacks in the future, according to security experts.

“We see the potential of deepfakes to become a feature of enterprise attacks, amplifying existing social engineering techniques by making them appear even more credible,” Nir Chako, cyber research specialist at identity security firm CyberArk, told The Independent.

“It is not too much of a stretch for attackers to lift video and recordings of senior business leaders... and use them to generate deepfakes that act as a strategic follow-on to phishing attempts.”

Such attacks could be made more effective by the growing trend of remote working, which means some workers never actually meet their colleagues in real life.

“The pandemic, the resulting increase in people working from home, and higher reliance on online connectivity, combined with uncertainty among people, are likely to feed into the effectiveness of the use of deepfakes to spread disinformation,” said Petr Somol, AI research director at cyber security firm Avast.

“Deepfakes will likely reach a quality this year where they can be actively used in disinformation campaigns. Conspiracy theories about the coronavirus, such as its alleged spread via 5G, could be reemphasized via deepfake videos – for example, wrongly showing politicians as conspirators.”

Most major video-sharing platforms already have policies in place to prevent deepfakes from tricking their users. To enforce those policies, however, the platforms first need to be able to recognise that a video is fake. By the time they do, the clip may have gone viral and the damage may already be done.

TikTok’s rules, for example, state that digital forgeries will be removed if they “mislead users by distorting the truth of events and cause harm to the subject of the video, other persons or society”.

As with much online content moderation, this leaves a large grey area in which the rules can be exploited or ignored. Despite TikTok’s policies, the social media firm claims the account’s username is enough to inform users that the Tom Cruise videos are not real. Along with hundreds of others, they remain online.
