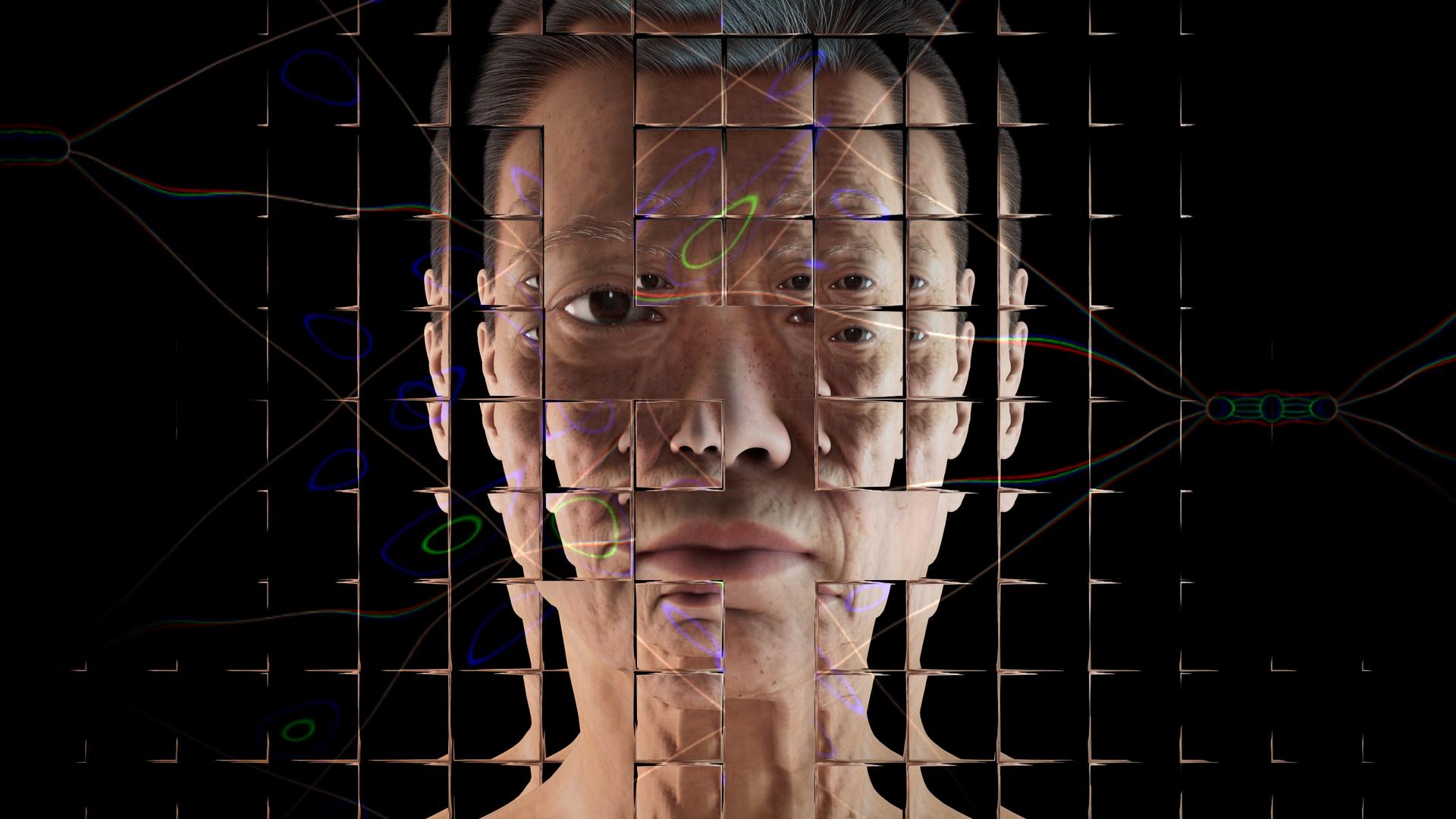

Deepfake faces are even more trustworthy than real people, study warns

Participants correctly told real faces from fake ones only 48 per cent of the time, slightly worse than the odds of flipping a coin

Fake faces created by artificial intelligence appear more trustworthy to human beings than real people do, new research suggests.

The technology, known as a ‘deepfake’, uses artificial intelligence and deep learning (an algorithmic learning method used to train computers) to create a person who appears authentic.

Deepfakes can even be used to put words in people’s mouths, such as a fake Barack Obama insulting Donald Trump, or a manipulated video of Richard Nixon delivering an Apollo 11 presidential address he never gave.

In one experiment, researchers asked participants to classify faces, some real and some generated by the StyleGAN2 algorithm, as either real or fake. Participants were correct 48 per cent of the time, slightly worse than the 50 per cent expected from flipping a coin.

In a second experiment, participants were first trained to spot synthetic faces and then tested on faces from the same data set, but their accuracy rose only to 59 per cent.

Research from the University of Oxford, Brown University and the Royal Society supports this, showing that most people cannot tell they are watching a deepfake video even when they are warned that the content may have been digitally altered.

The researchers behind the StyleGAN2 experiments then tested whether perceptions of trustworthiness could help people tell synthetic faces from real ones.

“Faces provide a rich source of information, with exposure of just milliseconds sufficient to make implicit inferences about individual traits such as trustworthiness. We wondered if synthetic faces activate the same judgements of trustworthiness,” Dr Sophie Nightingale from Lancaster University and Professor Hany Farid from the University of California, Berkeley, wrote in Proceedings of the National Academy of Sciences.

“If not, then a perception of trustworthiness could help distinguish real from synthetic faces.”

Unfortunately, synthetic faces were rated 7.7 per cent more trustworthy than real faces, and women’s faces were rated more trustworthy than men’s.

“A smiling face is more likely to be rated as trustworthy, but 65.5 per cent of the real faces and 58.8 per cent of synthetic faces are smiling, so facial expression alone cannot explain why synthetic faces are rated as more trustworthy,” the researchers wrote.

The researchers suggest that synthetic faces may be seen as more trustworthy because they tend to resemble average faces, which people generally find more trustworthy.

The researchers proposed guidelines for the creation and distribution of deepfakes, such as “incorporating robust watermarks”, and a reconsideration of the “often-laissez-faire approach to the public and unrestricted releasing of code for anyone to incorporate into any application”.

Deepfakes could be the most dangerous form of AI-enabled crime, a report from University College London has suggested.

“People now conduct large parts of their lives online and their online activity can make and break reputations. Such an online environment, where data is property and information power, is ideally suited for exploitation by AI-based criminal activity,” said Dr Matthew Caldwell.

“Unlike many traditional crimes, crimes in the digital realm can be easily shared, repeated, and even sold, allowing criminal techniques to be marketed and for crime to be provided as a service. This means criminals may be able to outsource the more challenging aspects of their AI-based crime.”

Currently, deepfakes are predominantly used for pornography. In June 2020, research indicated that 96 per cent of all deepfakes online were pornographic, and that nearly 100 per cent of those depicted women.
