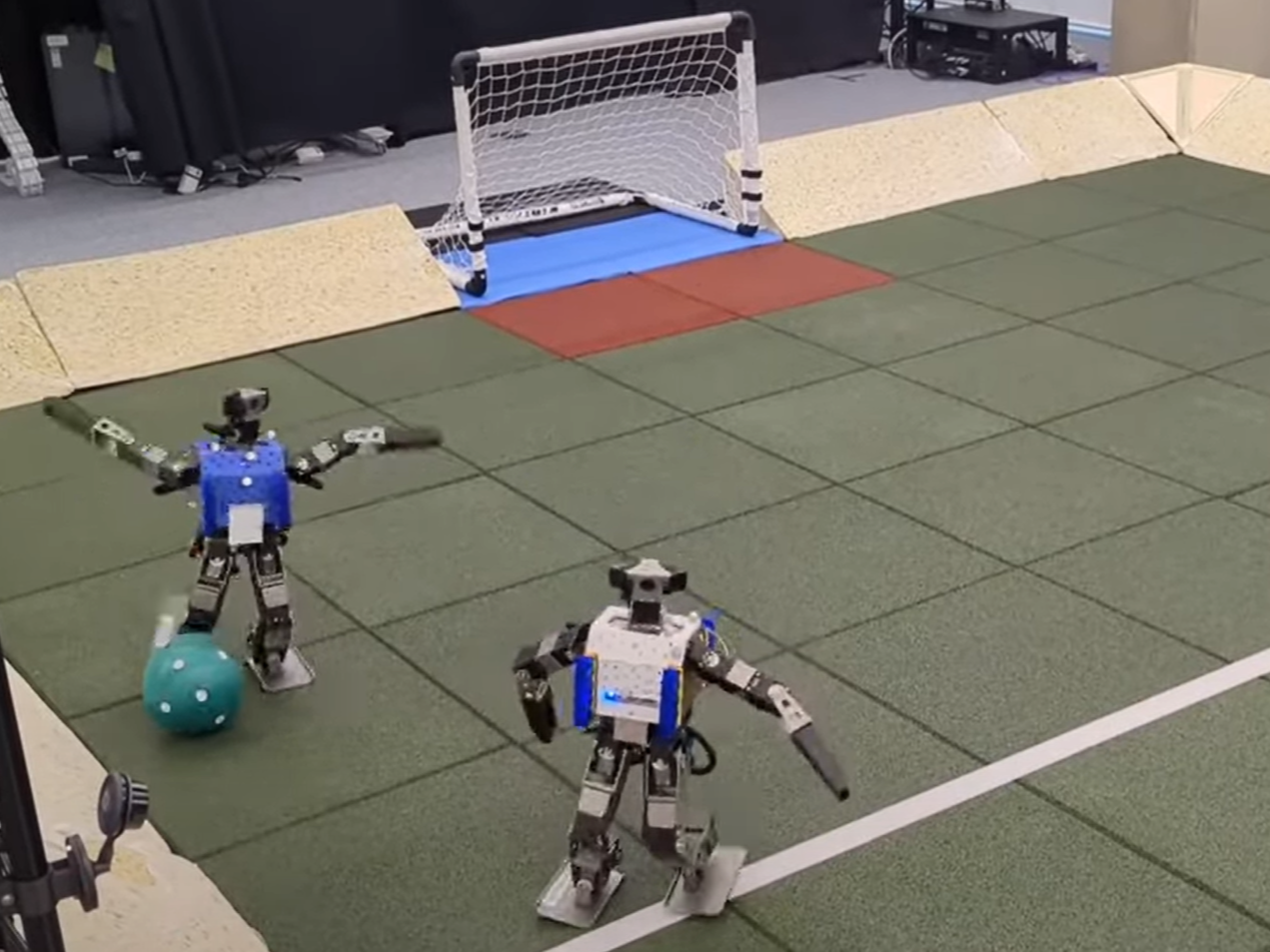

AI robots figure out how to play football in shambolic footage

DeepMind’s artificial intelligence allows bots to make tackles, score goals and easily recover from falls when tripped

Robots fitted with AI developed by Google’s DeepMind have figured out how to play football.

The miniature humanoid robots, which are about knee height, were able to make tackles, score goals and easily recover from falls when tripped.

To teach the robots how to play, AI researchers first used DeepMind’s state-of-the-art MuJoCo physics engine to train virtual versions of them in decades of match simulations.

The simulated robots were rewarded if their movements led to improved performance, such as winning the ball from an opponent or scoring a goal.

Once the simulated robots were sufficiently capable of performing the basic skills, DeepMind researchers transferred the AI into real-life versions of the bipedal bots, which were able to play one-on-one games of football against each other with no additional training required.

“The trained soccer players exhibit robust and dynamic movement skills, such as rapid fall recovery, walking, turning, kicking and more,” DeepMind noted in a blog post.

“The agents also developed a basic strategic understanding of the game, and learned, for instance, to anticipate ball movements and to block opponent shots.

“Although the robots are inherently fragile, minor hardware modifications, together with basic regularisation of the behaviour during training led the robots to learn safe and effective movements while still performing in a dynamic and agile way.”

A paper detailing the research, titled ‘Learning agile soccer skills for a bipedal robot with deep reinforcement learning’, is currently under peer review.

Previous DeepMind research on football-playing AI has used different team setups, increasing the number of players in order to teach simulated humanoids how to work as a team.

The researchers say the work will not only advance coordination between AI systems, but also offer new pathways towards building artificial general intelligence (AGI) that is equivalent or superior to human intelligence.
