Electronic tongue reveals ‘inner thoughts’ of AI

Researchers say device gives glimpse into mysterious decision-making process of artificial intelligence

Scientists have developed an electronic tongue capable of discerning between different tastes better than a human.

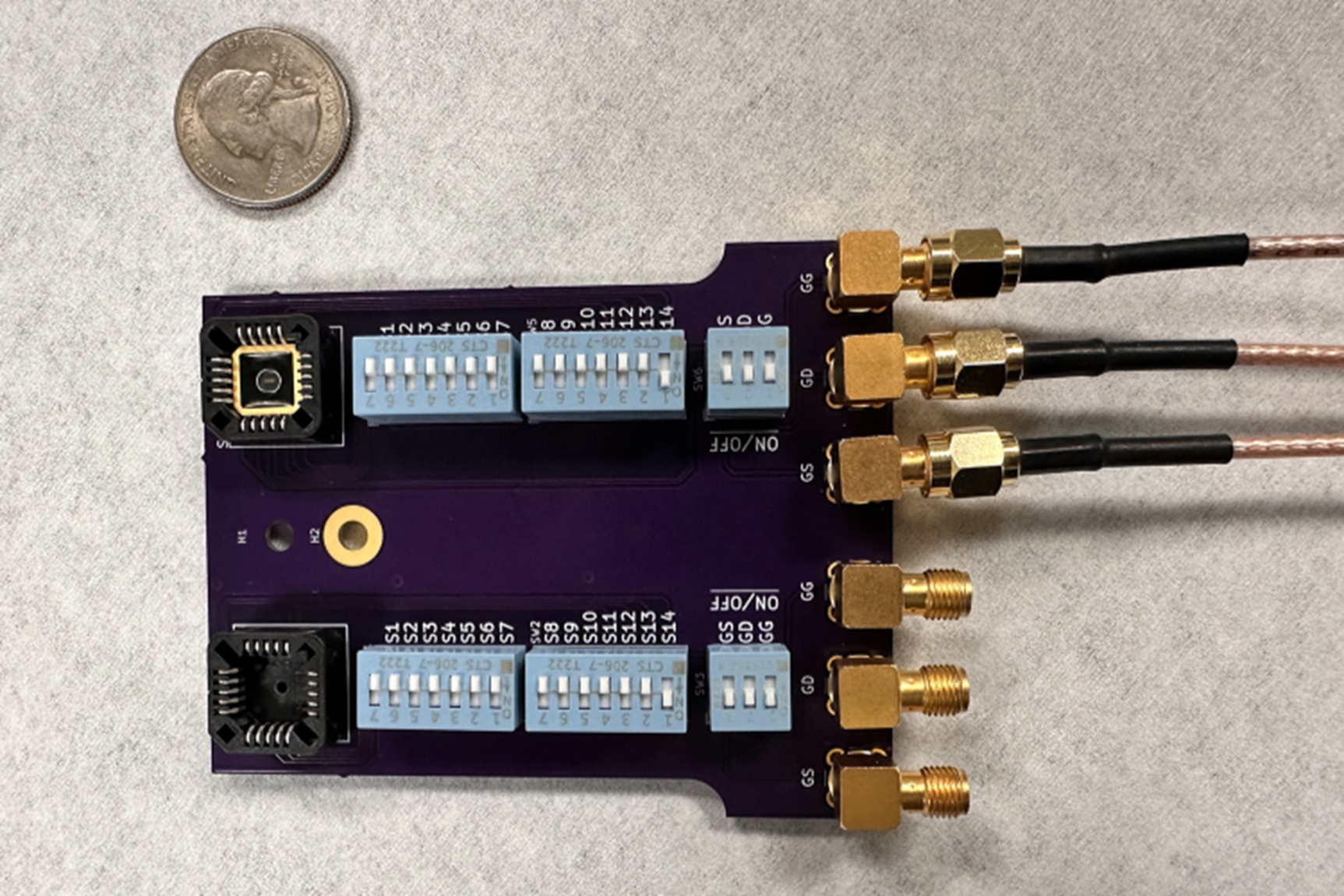

A team from Penn State in the US claims the graphene-based device holds the potential to “revolutionise” the detection of chemical and environmental changes, which could be used in everything from medical diagnostics to spotting when food has spoiled.

The new technology also offers unique insight into the “inner thoughts” of artificial intelligence, whose decision-making has largely remained opaque, an issue known as the black box problem.

The team were able to do this by reverse engineering how the neural network came to its final determination when identifying differences between various types of milk, coffee and fizzy drinks.

This process gave the researchers “a glimpse into the neural network’s decision-making process”, which they claimed could lead to better AI safety and development.

“We’re trying to make an artificial tongue, but the process of how we experience different foods involves more than just the tongue,” said Saptarshi Das, a professor of engineering science and mechanics at Penn State.

“We have the tongue itself, consisting of taste receptors that interact with food species and send their information to the gustatory cortex — a biological neural network.”

The neural network used by the electronic tongue reached a tasting accuracy of more than 95 per cent when it worked from the data it judged most important rather than from human-selected parameters.

Using a method called Shapley additive explanations, the researchers could delve into the decision-making process of the neural network.

Rather than assessing individual human-assigned parameters, the neural network considered the data it determined was most important for assessing different tastes.
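For readers curious about what Shapley additive explanations involve, the idea comes from game theory: each input feature is credited with its average contribution to a prediction across every combination of the other features. The short Python sketch below illustrates that calculation exactly for a tiny case. The function `toy_model`, the three features and the zero baseline are all hypothetical stand-ins chosen for illustration; this is not the researchers' network, sensor data or code.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input x, measured against a baseline input.

    A feature that is "absent" from a coalition takes its baseline value.
    """
    n = len(x)
    values = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [x[j] if (j in subset or j == i) else baseline[j]
                          for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j]
                             for j in range(n)]
                # Marginal contribution of feature i to this coalition.
                values[i] += weight * (predict(with_i) - predict(without_i))
    return values


def toy_model(features):
    # Hypothetical model: one independent feature and two that interact.
    a, b, c = features
    return 2.0 * a + b * c


x = [1.0, 2.0, 3.0]         # hypothetical sample to explain
baseline = [0.0, 0.0, 0.0]  # hypothetical reference input
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

In this toy case the first feature is credited with its own direct effect, while the two interacting features split the credit for their joint effect equally. The credits always add up to the difference between the model's output for the sample and for the baseline. Exact calculation becomes impractical as the number of features grows, so tools used on real networks rely on approximations.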

“We found that the network looked at more subtle characteristics in the data – things we, as humans, struggle to define properly,” Professor Das said.

“And because the neural network considers the sensor characteristics holistically, it mitigates variations that might occur day-to-day. In terms of the milk, the neural network can determine the varying water content of the milk and, in that context, determine if any indicators of degradation are meaningful enough to be considered a food safety issue.”

The research was detailed in a study, titled ‘Robust chemical analysis with graphene chemosensors and machine learning’, published in the journal Nature.
