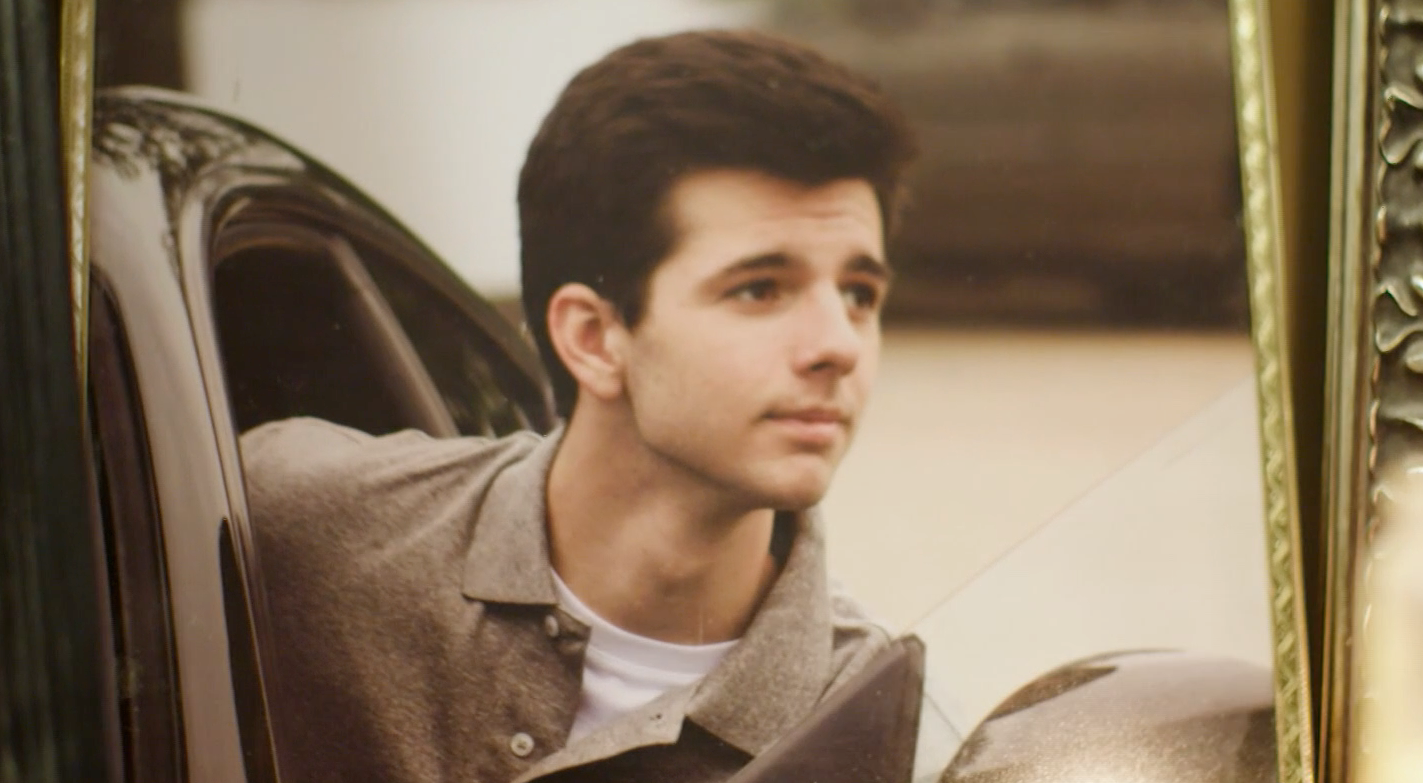

Parents sue Facebook, Instagram and Snapchat claiming they caused teenage son’s suicide through ‘addictive design’

The family of Christopher ‘CJ’ Dawley allege that their son developed a social media addiction that led to his death

The family of a Wisconsin teenager who died by suicide in 2015 have sued the parent companies of Facebook, Instagram and Snapchat, claiming the companies sparked his mental crisis with their addictive products.

Donna Dawley, the mother of Christopher "CJ" Dawley, filed a wrongful death lawsuit against Meta Platforms and Snap in federal court in Wisconsin last week.

The suit was filed with the help of the Social Media Victims Law Center (SMVLC), a specialist law firm founded to force social media companies to change their design practices by imposing financial penalties on them.

"Congressional testimony has shown that both Meta Platforms and Snapchat were aware of the addictive nature of their products and failed to protect minors in the name of more clicks and additional revenue," said Matthew P Bergman, the firm's founder.

"We are calling on the parent companies of Facebook, Instagram and Snapchat to prioritize the health and wellness of its users by implementing safeguards to protect minors from the danger of cyberbullying and sexual exploitation that run rampant on their platforms."

A spokesperson for Meta, which owns Facebook and Instagram, declined to comment directly on the lawsuit, but outlined a range of steps the company has taken to safeguard users' mental health.

A spokesperson for Snap said: "While we can't comment on active litigation, our hearts go out to any family who has lost a loved one to suicide.

"We intentionally built Snapchat differently than traditional social media platforms to be a place for people to connect with their real friends, and offer in-app mental health resources, including on suicide prevention for Snapchatters in need.

"Nothing is more important than the safety and wellbeing of our community and we are constantly exploring additional ways we can support Snapchatters."

Christopher Dawley was 17 years old when he killed himself in 2015. The lawsuit alleges that he had developed a social media addiction that kept him awake "at all hours of the night", left him increasingly "obsessed with his body image", and led him to often exchange explicit photos with other users.

The lawsuit describes him as an active churchgoer, golfer, baseball player and football quarterback who began using all three apps around 2012, when he was 15. Though he "never showed outward signs of depression or mental injury", he "never left his smartphone", which he was allegedly still holding when his body was found.

"This product liability action seeks to hold defendants’ products responsible for causing and contributing to burgeoning mental health crisis perpetrated upon the children and teenagers in the United States by defendants and, specifically, for the death by suicide of Christopher J Dawley, caused by his addictive use of defendants’ unreasonably dangerous and defective social media products," the lawsuit says.

"Defendants’ own research also points to the use of [their] social media products as a cause of increased depression, suicidal ideation, sleep deprivation, and other, serious harms.... moreover, defendants have invested billions of dollars to intentionally design and develop their products to encourage, enable, and push content to teens and children that defendants know to be problematic and highly detrimental to their minor users’ mental health."

Many of its arguments about Meta's apps are based on the release of the so-called Facebook Papers and the testimony of their leaker Frances Haugen before Congress last October.

In response to an inquiry from The Independent, a Meta spokesperson laid out the company's rules against graphic suicide and self-harm content, its attempts to prevent allowed but potentially detrimental content from being recommended to users by its algorithms, and its suicide prevention programme that automatically detects signs of suicidal intent and notifies emergency services about the most dangerous cases.

This story was updated at 6.22pm on Wednesday 20 April 2022 to add a statement from Snap.
