If a brain can be caught lying, should we admit that evidence to court? Here's what legal experts think

Using mind reading technologies in court could become common practice

A man is charged with stealing a very distinctive blue diamond. The man claims never to have seen the diamond before. An expert is called to testify whether the brain responses exhibited by this man indicate he has seen the diamond before. The question is – should this information be used in court?

Courts are reluctant to admit evidence where there is considerable debate over the interpretation of scientific findings. But a recent study from researchers in the US has noted that the accuracy of such “mind reading” technology is improving.

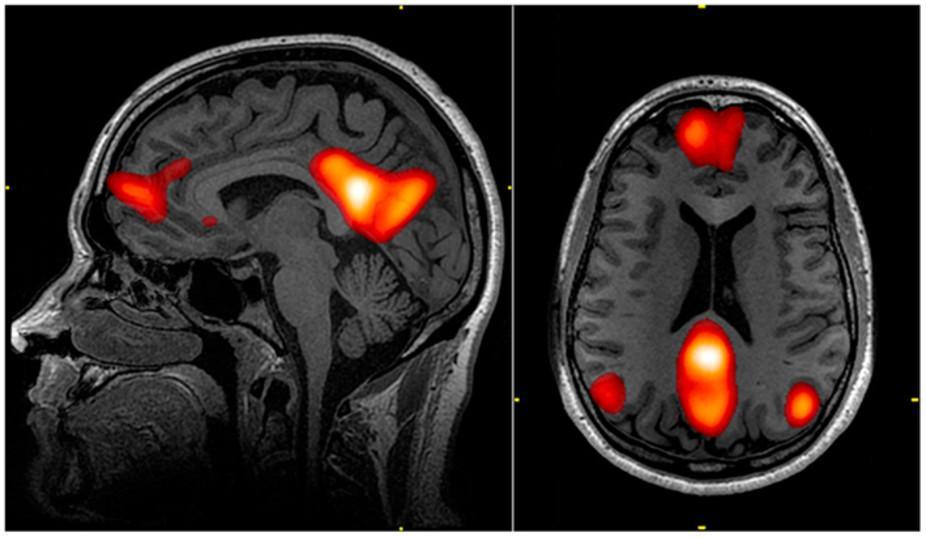

Methods of detecting false statements or concealed knowledge vary greatly. For example, traditional “lie detection” relies on measuring physiological reactions such as heart rate, blood pressure, pupil dilation and skin sweat response to direct questions, such as “did you kill your wife?” Alternatively, a functional magnetic resonance imaging (fMRI) approach uses brain scans to identify a brain signature for lying.

However, the technology considered by the US researchers, known as “brain fingerprinting”, “guilty knowledge tests” or “concealed information tests”, differs from standard lie detection because it claims to reveal the fingerprint of knowledge stored in the brain. In the case of the hypothetical blue diamond, for example, that would mean knowledge of what type of diamond was stolen, where it was stolen, and what type of tools were used to effect the theft.

This technique records electrical signals from the brain through the scalp using electroencephalography (EEG). One such signal, known as the P300, is assessed for signs that the individual recognises certain pieces of information presented in questions or as visual stimuli. The process includes some questions that are neutral in content and used as controls, while others probe for knowledge of facts related to the offence.

The P300 response typically occurs some 300 to 800 milliseconds after the stimulus, and it is said that those tested will react to the stimulus before they are able to conceal their response. If the probes sufficiently narrow the focus to knowledge that only the perpetrator of the crime could possess, then the test is said to be “accurate” in revealing this concealed knowledge. Proponents of the use of this technology argue that this gives much stronger evidence than is possible to get through human assessment.
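To make the comparison between probe and control items concrete, here is a minimal sketch in Python. It is an illustration only, not the researchers' method: the function names, the sampling rate and the synthetic recordings are all assumptions made for this example, and a real test would rely on far more careful statistics than a simple difference of averages. The sketch averages the signal in the 300 to 800 millisecond window after each stimulus and compares crime-related probes with neutral controls.

```python
from statistics import mean


def window_mean(epoch, sample_rate_hz, start_ms=300, end_ms=800):
    """Average amplitude of one recording between start_ms and end_ms after the stimulus.

    `epoch` is a list of amplitude samples whose first sample coincides with the stimulus.
    The 300-800 ms window is the range mentioned in the article; everything else is assumed.
    """
    start = int(start_ms * sample_rate_hz / 1000)
    end = int(end_ms * sample_rate_hz / 1000)
    return mean(epoch[start:end])


def probe_minus_control(probe_epochs, control_epochs, sample_rate_hz):
    """Difference between the average windowed response to probes and to neutral controls."""
    probe = mean(window_mean(e, sample_rate_hz) for e in probe_epochs)
    control = mean(window_mean(e, sample_rate_hz) for e in control_epochs)
    return probe - control


# Synthetic one-second recordings at a hypothetical 100 samples per second.
flat = [0.0] * 100                               # no response to a neutral item
bump = [0.0] * 30 + [5.0] * 50 + [0.0] * 20      # a response 300-800 ms after a probe

difference = probe_minus_control([bump, bump], [flat, flat], sample_rate_hz=100)
print(difference)
```

A clearly larger response to probes than to controls is what proponents read as recognition. As the article goes on to stress, that would indicate knowledge at most, not guilt.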

Assuming this technology might be capable of showing that someone has hidden knowledge of events relevant to a crime, should we be concerned about its use?

Potential for prejudice

Evidence of this sort has not yet been accepted by the English courts, and possibly never will be. But similar evidence has been admitted in other jurisdictions, including India.

In the Indian case of Aditi Sharma, the court heard evidence that her brain responses implicated her in her former fiancé’s murder. After investigators read statements related and unrelated to the offence, they claimed her responses indicated experiential knowledge of planning to poison him with arsenic, and of buying arsenic with which to carry out the murder. The case generated much discussion, and while she was initially convicted, this was later overturned.

However, the Indian Supreme Court has not ruled out the possibility of such evidence being used if the person being tested freely consents. We should not forget that people may knowingly conceal knowledge of facts relevant to a crime for all sorts of reasons, such as protecting other people or hiding illicit relationships. These reasons for hiding knowledge may have nothing to do with the crime. You could have knowledge relevant to a crime but be totally innocent of that crime. The test is for knowledge, not for guilt.

Context is key

The US researchers looked at whether brain-based evidence might unduly influence juries and prejudice the fair outcome of trials. They found that concerns about neuroscientific evidence adversely influencing trials could be overstated. In their experiment, mock jurors were influenced by the existence of brain-based evidence, whether it indicated guilty knowledge or the absence of it. But the strength of other evidence, such as motive or opportunity, weighed more heavily in the hypothetical jurors’ minds.

This is not surprising, as our case-based research demonstrates the importance of the context in which neuroscientific evidence is introduced in court. Such evidence could help support a case, but its success depends on the strength of all the evidence combined. In no case was the use of neuroscientific evidence alone determinative of the outcome, though in several it was highly significant.

Memory detection technologies are improving, but even if they are “accurate” (however we choose to define that term) it does not automatically mean they will or should be allowed in court. Society, legislators and the courts are going to have to decide whether our memories should be allowed to remain private or whether the needs of justice trump privacy considerations. Our innermost thoughts have always been viewed as private; are we ready to surrender them to law enforcement agencies?

Lisa Claydon is a senior lecturer in law and Paul Catley is the head of law school at The Open University. This article was originally published on The Conversation (www.theconversation.com)
