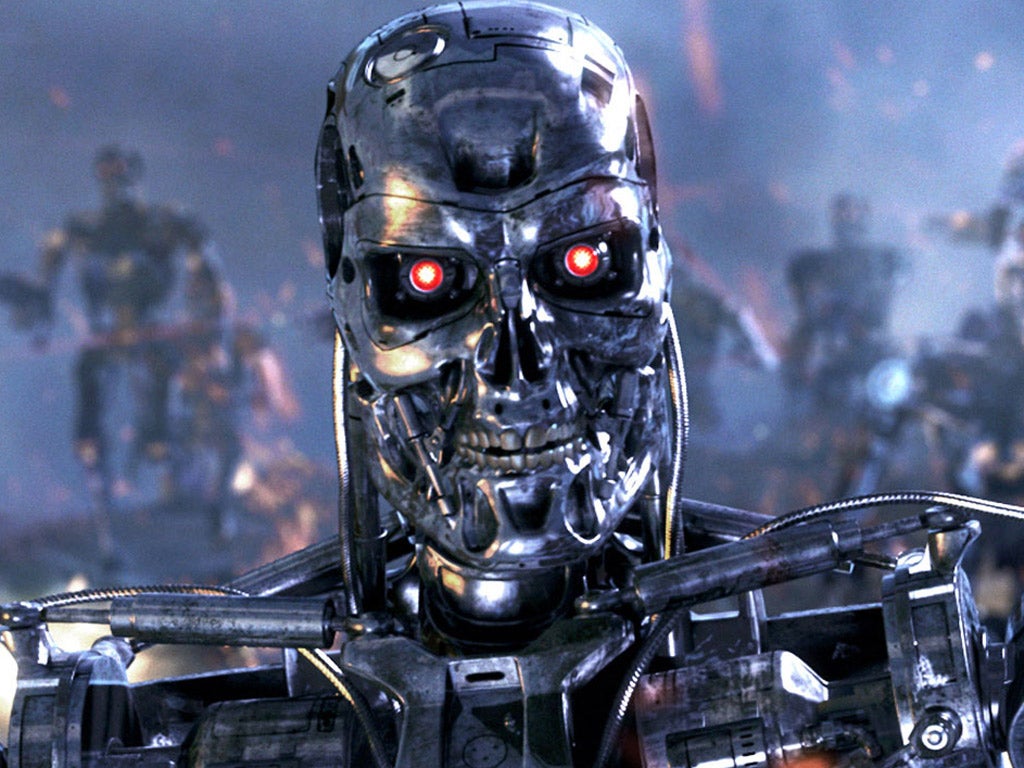

'Killer robots' which are able to identify and kill targets without human input should be banned, UN urged

Human rights investigator Christof Heyns to lead calls against lethal robotic weapons

The UN Human Rights Council will be warned of the ethical dangers posed by so-called “killer robots”: autonomous machines able to identify and kill targets without human input.

Tonight’s talk in Geneva will include an address by Christof Heyns, the UN special rapporteur on extrajudicial, summary or arbitrary executions for the Office of the High Commissioner for Human Rights, who will call for a moratorium on such technology to prevent its deployment on the battlefield.

Heyns, who has submitted a 22-page report on “lethal autonomous robots”, says that the deployment of such robots “may be unacceptable because no adequate system of legal accountability can be devised” and because “robots should not have the power of life and death over human beings.”

Heyns, a South African law professor who fulfils his role independently for the UN, has highlighted in particular the US’s counter-terrorism operations using remotely-piloted drones to target individuals in hotspots such as Pakistan and Yemen.

Although fully autonomous weapons have not yet been developed, Heyns says that “there is reason to believe that states will, inter alia, seek to use lethal autonomous robotics for targeted killing.”

Heyns points to the history of drone-deployment as evidence that countries could escalate to using autonomous robots. Drones were originally intended only for surveillance purposes and “offensive use was ruled out because of the anticipated adverse consequences.”

These considerations were ignored once it had been established that weaponised drones could provide “advantage over an adversary”.

The report highlights that despite recent advances, current military technology is still “inadequate” and unable “to understand context.” This makes it difficult for robots to establish whether “someone is wounded and hors de combat” or “in the process of surrendering.”

Heyns added that “a further concern relates to the ability of robots to distinguish legal from illegal orders.”

“The UN report makes it abundantly clear that we need to put the brakes on fully autonomous weapons, or civilians will pay the price in the future,” said Steve Goose, arms director at Human Rights Watch. “The US and every other country should endorse and carry out the UN call to stop any plans for killer robots in their tracks.”

Barack Obama defended drone use in a speech last Friday, arguing that the use of UAVs was not only legal but the most efficient way of targeting terrorists. He referenced the “heartbreaking” civilian casualties caused by their use, but said that armed intervention was more dangerous – both for civilians and soldiers.

Examples of autonomous weapons systems already in use include the US Navy’s Phalanx gun system that automatically engages incoming threats and the Israeli Harpy drone that automatically attacks radar emitters.
