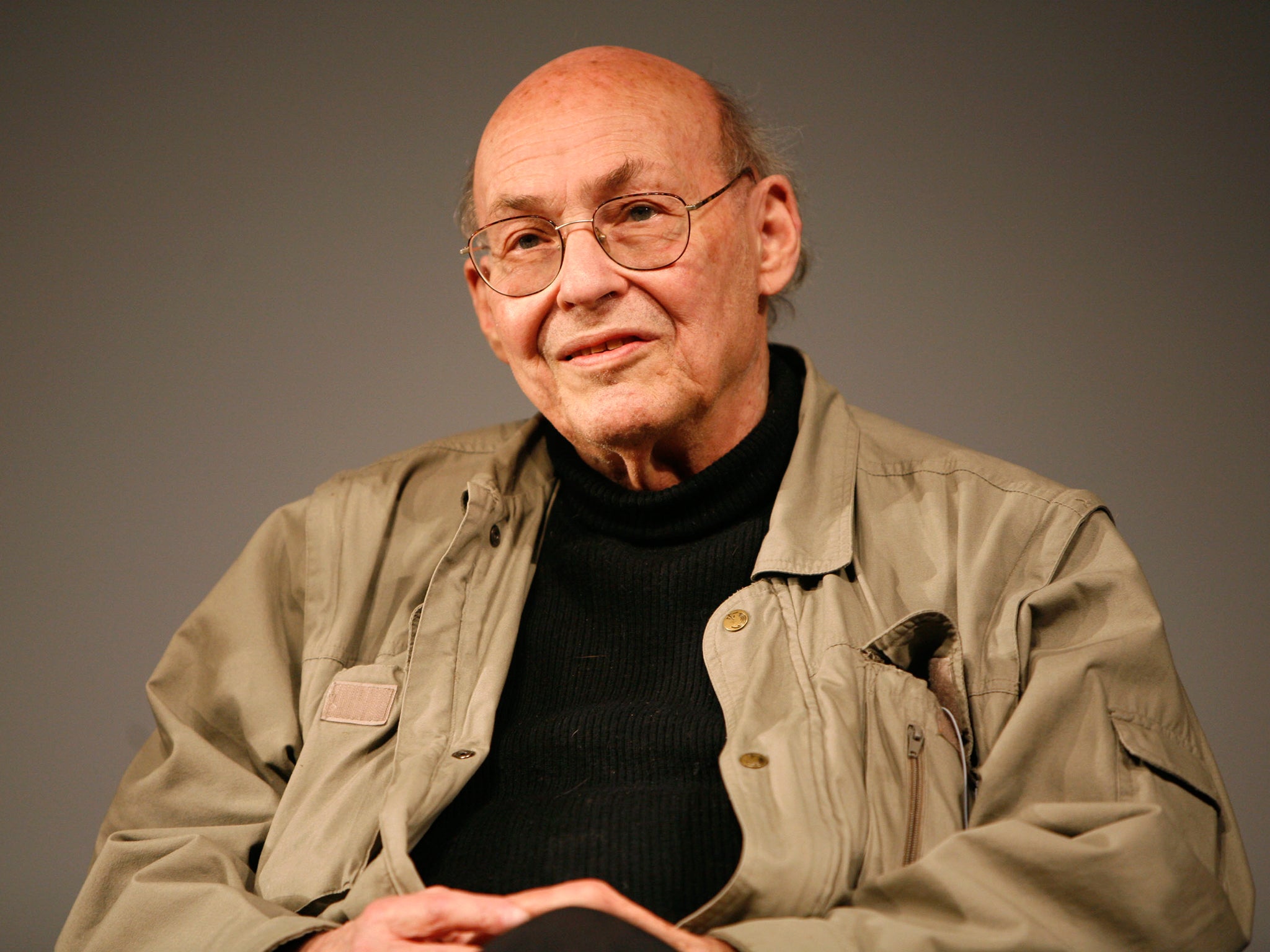

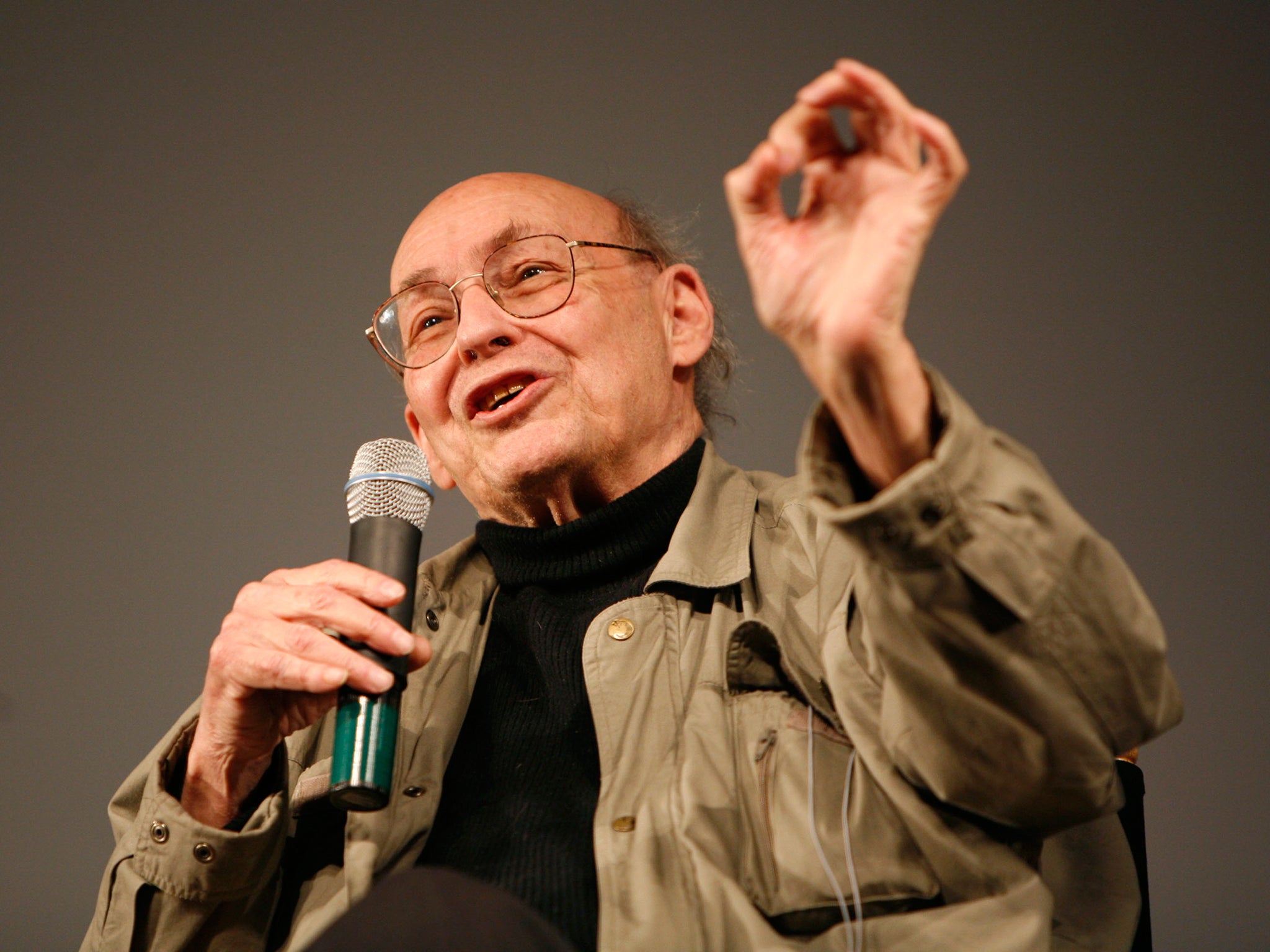

Marvin Minsky dead: 'The world has lost one of its greatest minds in science'

Legendary cognitive scientist who pioneered artificial intelligence thrived even when the field suffered hard times

Marvin Minsky, a legendary cognitive scientist who pioneered the field of artificial intelligence, died Sunday at the age of 88. His death was announced by Nicholas Negroponte, founder of the MIT Media Lab, who distributed an email to his colleagues:

With great great sadness, I have to report that Marvin Minsky died last night. The world has lost one of its greatest minds in science. As a founding faculty member of the Media Lab he brought equal measures of humour and deep thinking, always seeing the world differently. He taught us that the difficult is often easy, but the easy can be really hard.

In 1956, when the very idea of a computer was only a couple of decades old, Minsky attended a two-month symposium at Dartmouth that is considered the founding event of the field of artificial intelligence. His 1960 paper, “Steps Toward Artificial Intelligence,” laid out many of the routes that researchers would take in the decades to come. He co-founded the Artificial Intelligence Lab at MIT with John McCarthy, and wrote seminal books that colleagues consider essential to understanding the challenges of creating machine intelligence, including “The Society of Mind” and “The Emotion Machine.”

You get a sense of his storied and varied career from his home page at MIT:

In 1951 he built the SNARC, the first neural network simulator. His other inventions include mechanical arms, hands and other robotic devices, the Confocal Scanning Microscope, the “Muse” synthesizer for musical variations (with E. Fredkin), and one of the first LOGO “turtles”. A member of the NAS, NAE and Argentine NAS, he has received the ACM Turing Award, the MIT Killian Award, the Japan Prize, the IJCAI Research Excellence Award, the Rank Prize and the Robert Wood Prize for Optoelectronics, and the Benjamin Franklin Medal.

One of his former students, Patrick Winston, now a professor at M.I.T., wrote a brief tribute to his friend and mentor:

Many years ago, when I was a student casting about for what I wanted to do, I wandered into one of Marvin's classes. Magic happened. I was awed and inspired. I left that class saying to myself, “I want to do what he does.”

M.I.T.'s obituary of Minsky explains some of the professor's critical insights into the challenge facing anyone trying to replicate or in some way match human intelligence within the constraints of a machine:

Minsky viewed the brain as a machine whose functioning can be studied and replicated in a computer — which would teach us, in turn, to better understand the human brain and higher-level mental functions: How might we endow machines with common sense — the knowledge humans acquire every day through experience? How, for example, do we teach a sophisticated computer that to drag an object on a string, you need to pull, not push — a concept easily mastered by a two-year-old child?

His field went through some hard times, but Minsky thrived. Although he was an inventor, his great contributions were theoretical insights into how the human mind operates.

In a letter nominating Minsky for an award, Prof. Winston described a core concept in Minsky's book "The Society of Mind": "[I]ntelligence emerges from the cooperative behavior of myriad little agents, no one of which is intelligent by itself." If a single word could encapsulate Minsky's professional career, Winston said in a phone interview Tuesday, it would be "multiplicities."

The word “intelligence,” Minsky believed, was a “suitcase word,” Winston said, because “you can stuff a lot of ideas into it.”

His colleagues knew Minsky as a man who was strikingly clever in conversation, with an ability to anticipate what others were thinking and then conjure up an even more intriguing variation on those thoughts.

Journalist Joel Garreau on Tuesday recalled meeting Minsky in 2004 at a conference in Boston on the future evolution of the human race: "What a character! Hawaiian shirt, smile as wide as a frog’s, waving his hands over his head, a telescope always in his pocket, a bag full of tools on his belt including what he said was a cutting laser, and a belt woven out of 8,000-pound-test Kevlar which he said he could unravel if he ever needed to pull his car out of a ravine."

Minsky and his wife Gloria, a pediatrician, enjoyed a partnership that began with their marriage in 1952. Gloria recalled her first conversation with Marvin: “He said he wanted to know about how the brain worked. I thought he is either very wise or very dumb. Fortunately it turned out to be the former.”

Their home became a repository for all manner of artifacts and icons, and could easily merit status as a national historic site. They welcomed me, a Post reporter, into their home last spring.

They showed me the bongos that physicist Richard Feynman liked to play when he visited. Looming over the bongos was a 1950s-vintage robot, which was literally straight out of the imagination of novelist Isaac Asimov, another pal who would drop in for the Minsky parties back in the day. A trapeze hung over the middle of the room, and off to one side stood a vintage jukebox. Their friends included science-fiction writers Arthur C. Clarke and Robert Heinlein and filmmaker Stanley Kubrick.

As a young scientist, Marvin Minsky lunched with Albert Einstein but couldn’t understand him because of his German accent. He had many conversations with the computer genius John von Neumann, of whom he said:

“He always welcomed me, and we’d start talking about something, automata theory, or computation theory. The phone would ring every now and then and he’d pick it up and say, several times, ‘I’m sorry, but I never discuss non-technical matters.’ I remember thinking, someday I’ll do that. And I don’t think I ever did.”

Minsky said it was Alan Turing who brought respectability to the idea that machines could someday think.

“There were science-fiction people who made similar predictions, but no one took them seriously because their machines became intelligent by magic. Whereas Turing explained how the machines would work,” he said.

There were institutions back in the day that were eager to invest in intelligent machines.

“The 1960s seems like a long time ago, but this miracle happened in which some little pocket of the U.S. naval research organization decided it would support research in artificial intelligence and did in a very autonomous way. Somebody would come around every couple of years and ask if we had enough money,” he said — and flashed an impish smile.

But money wasn’t enough.

“If you look at the big projects, they didn’t have any particular goals,” he said. “IBM had big staffs doing silly things.”

But what about IBM’s much-hyped Watson (cue the commercial with Bob Dylan)? Isn’t that artificial intelligence?

“I wouldn’t call it anything. An ad hoc question-answering machine.”

Was he disappointed at the progress so far?

“Yes. It’s interesting how few people understood what steps you’d have to go through. They aimed right for the top and they wasted everyone’s time,” he said.

Are machines going to become smarter than human beings, and if so, is that a good thing?

“Well, they’ll certainly become faster. And there’s so many stories of how things could go bad, but I don’t see any way of taking them seriously because it’s pretty hard to see why anybody would install them on a large scale without a lot of testing.”

© Washington Post
