How women in science have been systematically robbed of their ideas

Anyone who ever doubted the harsh realities of patriarchy need only look upon the history of science, writes Andy Martin

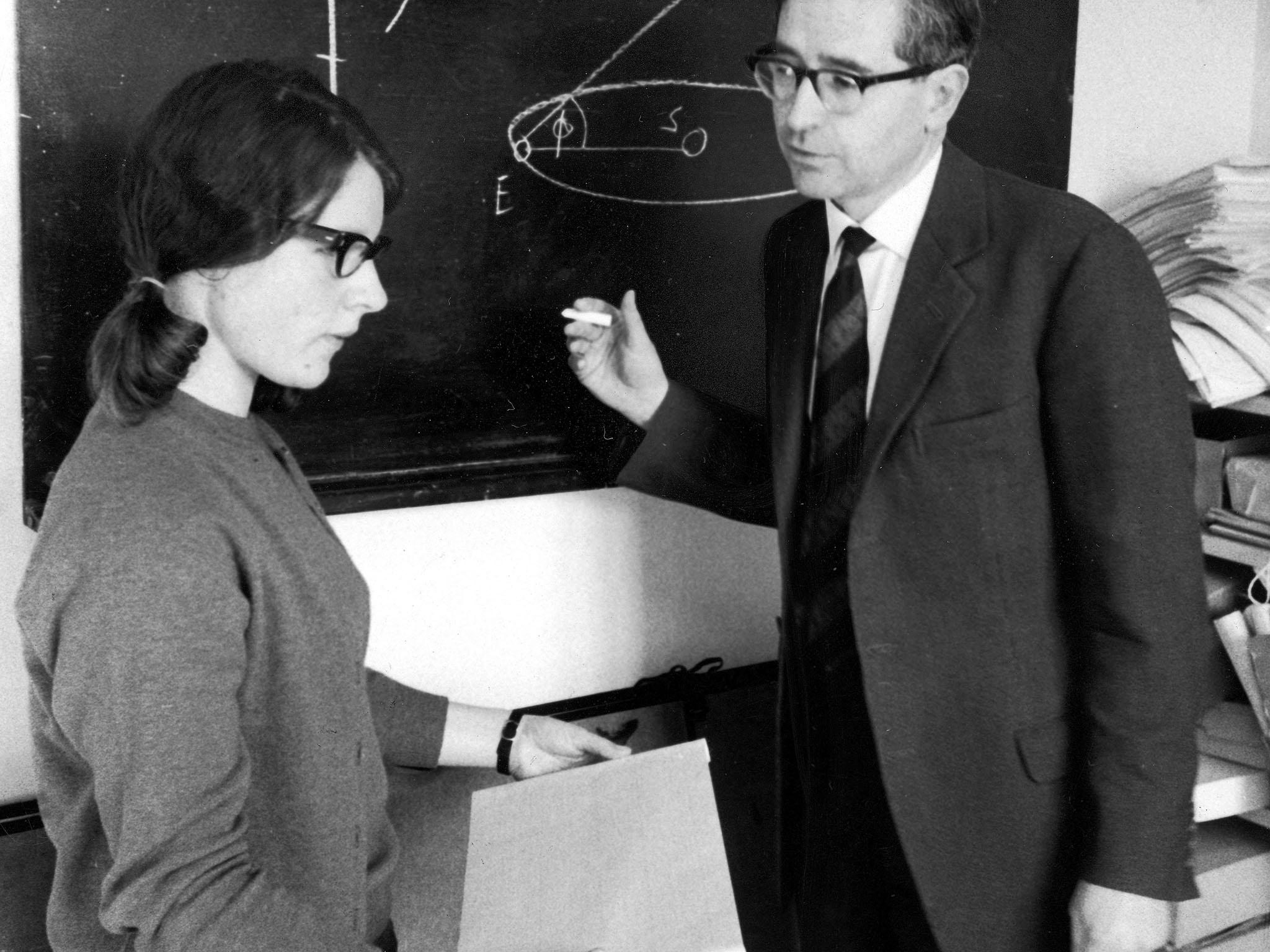

I’m not saying anybody stole anybody else’s Nobel prize. Nothing was stolen. At the same time, it feels as if somebody was robbed. But you decide. The facts are clear enough. Back in 1967, Jocelyn Bell was a 20-something graduate student at New Hall (now Murray Edwards College) in Cambridge, studying astrophysics, doing a PhD in radio astronomy. In fact she was one of the pioneers of radio astronomy in Cambridge.

It wasn’t all theory back then. You still had to build the “telescopes”, which looked more like antennae, sprouting up out of the countryside. Bell got her hands dirty, banging a thousand poles into the fields north of Cambridge, and stringing 120 miles of wire between them. From the point of view of outer space, it must have looked like acres of fine mesh laid down like dew on the green and pleasant lands. What Bell and others were trying to catch in their net was electromagnetic manna from heaven.

Bell had the job of analysing the data. Sounds easy now, but I know how hard it was back then (I have a twin brother who really is a rocket scientist, wrote his PhD on X-ray astronomy, and used to curse the roomfuls of paper he regularly had to scour in search of elusive gems of hard information). Bell was specifically looking for fluctuations in radio emissions, known rather lyrically as “interplanetary scintillations”. She had to keep an eye on four three-track pen recorders, which collectively churned out 96 feet of printouts every single day.

In the course of scanning reams of paper for minuscule anomalies, she was the first to spot what she initially called “unclassifiable scruff”. She soon realised that what she was looking at was a regular signal, like a pulse, emanating from an area of space known as “right ascension 1919” every 1.33 seconds. Her first thought, naturally enough, was: aliens. This was ET phoning home. But Bell didn’t think the hypothesis of an extraterrestrial civilisation would go down all that well in a doctoral thesis.

“I went home that night very cross,” she wrote later. “Here was I trying to get a PhD out of a new technique, and some silly lot of little green men had to choose my aerial and my frequency to communicate with us.” Which explains why the source became known early on as “LGM” (Little Green Men).

Bell spent months eliminating the usual suspects from her enquiries. Nothing to do with the moon, passing satellites, or any terrestrial source. She finally worked out that this celestial Morse code was most likely coming from an ancient star that had shrunk down to a few miles across and was spinning around at tremendous speed and flashing out signals like a lighthouse. Punctually and reliably.

This type of star was a “neutron star”, the collapsed remnant of a supernova, so dense that a teaspoonful weighs as much as a decent-sized planet. And the signals themselves became known as “pulsars” (combining “quasar” and “pulse”). It was a major intellectual breakthrough that would lead to more intense theoretical speculation about the formation and structure of the universe. And Jocelyn Bell was at the forefront of the research, every step of the way. So naturally, in 1974, they gave the Nobel prize for discovering pulsars to her supervisor. A guy (who had initially been sceptical about Bell’s findings). Classic. Starlight robbery.

I guess you could say it wasn’t really his fault. He was just the beneficiary of a pervasive bias. On the other hand, to my way of thinking, he could always have turned the prize down, like Jean-Paul Sartre, in protest at the system. But he didn’t. I’m not calling it unethical, only very, very uncool.

You could probably scan the universe (like Jocelyn Bell – now Jocelyn Bell Burnell, and chancellor of the University of Dundee) and find countless similar examples. But while we are talking about Cambridge, let’s just stay there and consider the well-known case of the discovery of the structure of DNA. Let us go to the Eagle pub, in Bene’t Street (yes, the apostrophe really does belong there, weirdly enough, since the name is a contraction of Benedict).

This is where, according to a blue plaque on the wall, one day in 1953, Francis Crick and James Watson announced that they had finally come up with “the secret of life”. Drinks all round. And make mine a Nobel please. Which seems entirely fair, until you stop and consider that the structure of the “double helix” was first pointed out by a woman, Rosalind Franklin, based in London, not in the Eagle pub.

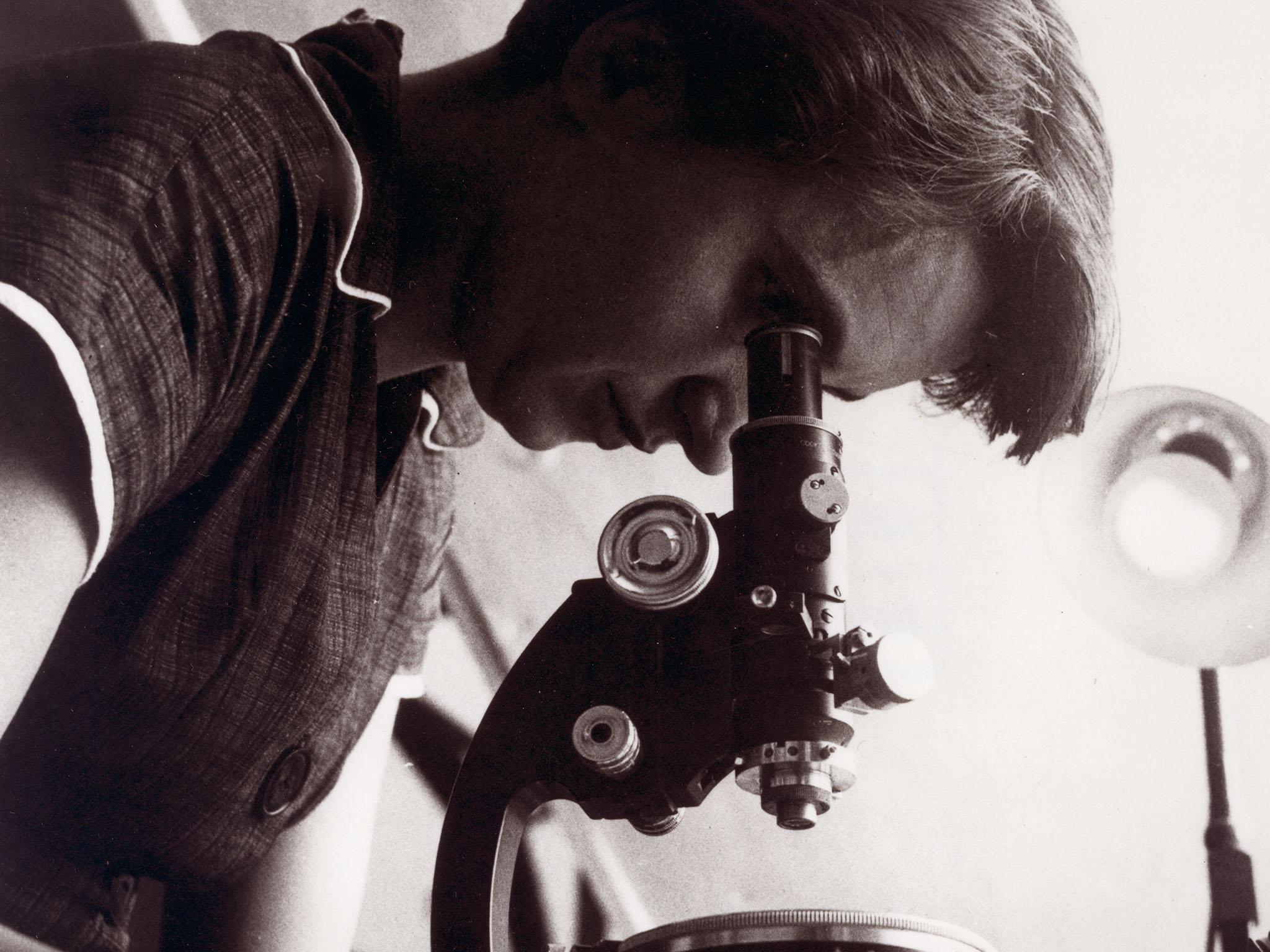

Her speciality was X-ray crystallography. She graduated from Cambridge and spent the war years examining the atomic structure of a piece of coal, working with the British Coal Utilisation Research Association. It was while she was at King’s College London that she started work on the X-ray diffraction analysis of deoxyribonucleic acid (DNA) and ribonucleic acid (the less famous RNA). She produced what were in effect photographs of the molecular structure of DNA. Notably the famous “Photo 51” (technically, an X-ray “diffractogram”) which effectively drew a diagram of the double helix.

Our two dashing male researchers then stuck their flag on top of her work. Unbeknownst to Franklin, the photograph had mysteriously made its way to Cambridge via Maurice Wilkins (one of her shady “colleagues” in London) and a graduate student. Franklin provided, at the very least, a crucial source of data to the development of the DNA model. But she got cut out of the story.

Her contribution only became known posthumously. She died in 1957, at the age of 37, of ovarian cancer, while still carrying out research into the structure of viruses. But you can’t help but wonder if getting sidelined and airbrushed out of the history of science might have had something to do with her early demise. As if she died of heartbreak or frustration or sheer fury at the inanity of it. Meanwhile Crick and Watson and Wilkins duly got their Nobel (for medicine) in 1962, after she was safely out of the way.

Watson went on, in his book, The Double Helix, to talk down Franklin. As did Wilkins in his autobiography. Her revenge is to have been resurrected on the West End stage in 2015 by Nicole Kidman, in the play Photograph 51.

Alessandro Strumia, an atom-smasher working at CERN in Geneva, recently said that physics was “invented and built by men” (remarks for which he was subsequently suspended). A decade or so ago, Lawrence Summers, then the president of Harvard, argued that women were just not cut out for doing science. To be fair to them, they were only giving vent to a prejudice that is built into the very DNA of scientific activity. Nature has always been seen as woman (“Mother Nature”), and science as man, exploring and penetrating and dominating and overcoming.

The Royal Society was a men-only club until as recently as 1945. Women were occasionally allowed to tag along and join in, like interns, only to have their work ripped off. For anyone who doubts the harsh realities of patriarchy, you only have to look upon the history of science – and despair. Science has been as irrational and prejudiced against women as the religion it has so often denounced.

Ironically enough, given that a woman was integral to the analysis of DNA, the heavy hand of genetics, like a more scientifically-minded Trump, has long been prodding, poking, groping women and pinning them down biologically. Their brains are different! No wonder they can’t do science. Female sexual difference is invariably constructed in terms of a lack, a physiological and cognitive deficit. The equally corrosive idea, arising out of theories of autism, that women are better at “empathy”, is another way of excluding them from “systemising” (which is another way of talking about thinking).

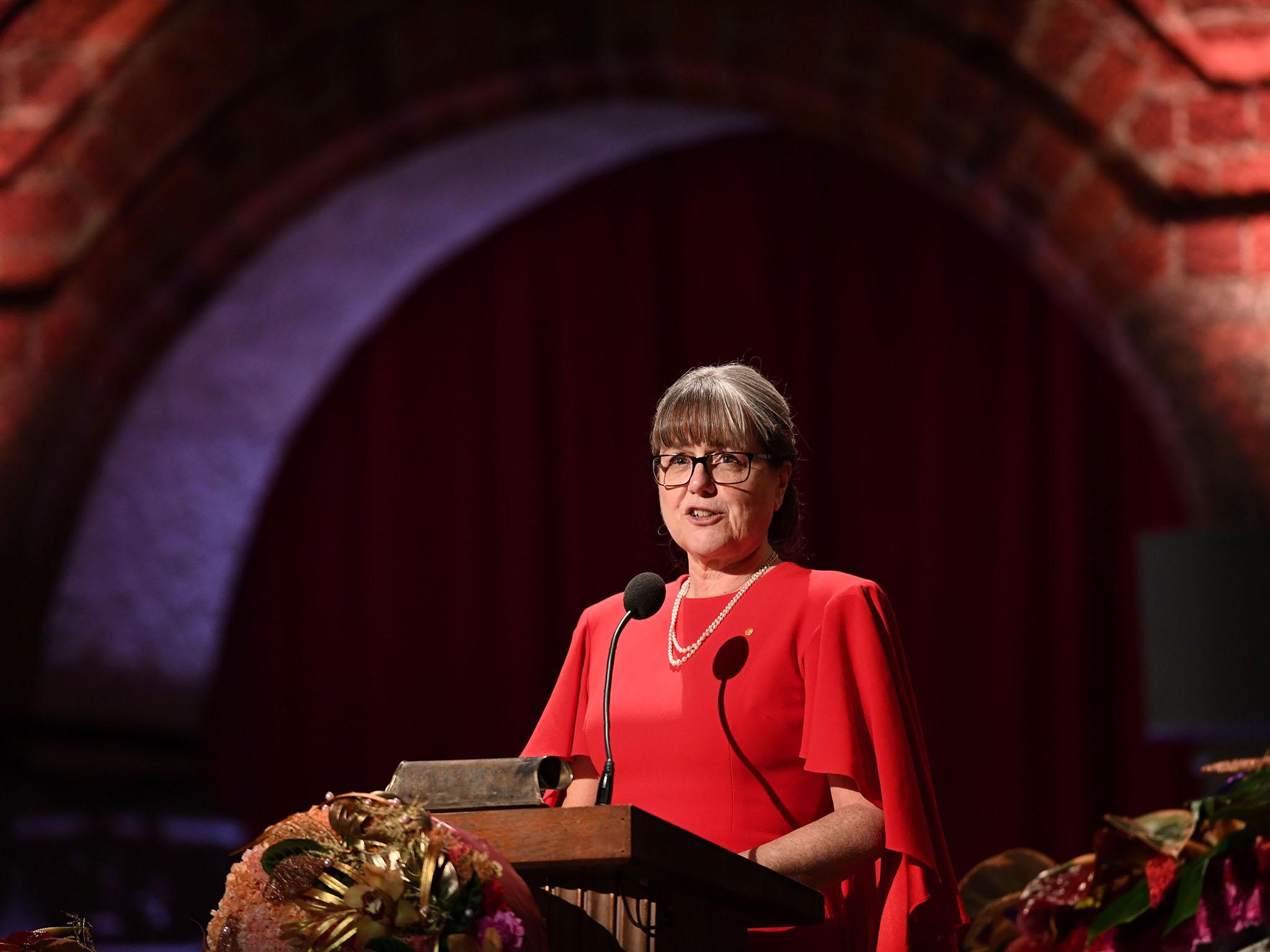

But now it’s possible for a woman to win the Nobel prize for physics (take a bow, Donna Strickland, only the second since Marie Curie). Perhaps there is even a chance of a woman’s face appearing on the yet-to-be-printed £50 note (I just pray this one is vegetarian). One of the major contenders has to be Ada Lovelace. She is one of the rare women scientists of the modern era to have received her due.

Lovelace was born Byron – the daughter of Lord Byron, the great Romantic poet once described as “mad, bad, and dangerous to know”. She became the Countess of Lovelace. But she also worked with Charles Babbage on his early prototype of the computer, then known as the “Analytical Engine”. Babbage saw it (as others before him had done) as a mechanical calculator, capable of doing sums at speed.

Lovelace was the first to spot its potential as an alternative brain, able to juggle symbols and process information. She is credited with coming up with the first “algorithm” or computer program more than a century in advance of Alan Turing. Since the rise of personal computing in the second half of the 20th century, Lovelace has become a poster girl of IT (and last year received the accolade of a belated obituary in The New York Times).

So, to state what ought to be obvious, women are perfectly capable of being scientists. The very word “scientist” is a recent invention, dating back to the early 19th century, to a division of labour, but also to the growth in our obsession with labelling everything, the taxonomic imperative. But women themselves have suffered above all from taxonomy, the sustained effort to put them in their place and stick a label on them and pin them to the wall like butterflies.

They have been the objects or subjects of science rather than its practitioners and exponents. Just as science in the 19th century propagated the ideas of “phrenology”, determining character and psychology on the basis of the shape of the head, so too “science” has tried to lay down the destiny of women on the basis of the contours of their bodies.

So much of what passes for science comes to be seen as absurd or fanciful or pseudo-science. In different times and places, science has been racist.

And science has undoubtedly been sexist, even structurally sexist. Just as language itself is. If I had a daughter, I would tell her (if I can still use that dubious object pronoun) to go and be a scientist if you want. Or a poet. Or a rock star. Or a captain of industry. Go and be a saint or a sinner, a hero or a coward, be brilliant or stupid, be beautiful or ugly, be a mad hedonist or a buttoned-up puritan, be straight or gay, be a good mother, a bad mother, or utterly indifferent towards motherhood. Or all of the above. Or none.

I wouldn’t go ahead and advise her to be a hunter or a gatherer, a murderer or a tyrant, a detective or a criminal, Beryl the Peril or Joan of Arc. In fact, I wouldn’t offer her any advice at all (I have two sons and I have offered them a similar lack of guidance, as I think they will confirm). I have no wisdom, no crystal ball, no power of determination. All of these things are possible. She is unlimited. Just as science is. A scientist, as Simone de Beauvoir said of a woman, “is not born but made”.

Sometimes it is important to acknowledge our ignorance, even as scientists. No one knows what science is any more. Lovelace spoke of herself as doing “poetical science”. We used to go about trying to define a scientific “method”. It was all to do with “verification” or, as Karl Popper had it, “falsifiability”. If it couldn’t be falsified (or proven to be wrong) then it wasn’t science. But the reality is that science is highly speculative. It used to be called “natural philosophy” and it still is that. Science is not just black holes and big bangs (yes, the plural, a potential plethora of singularities), it is also string theory and parallel universes, those exotic theories that are not susceptible to any empirical observation, but may or may not seem plausible at any given time.

I notice even Spider-Man now speaks of a multiverse or “spider-verse”. There is no “paradigm” (in Thomas Kuhn’s word) that is not subject to a revolution. Personally, I have always been a devotee of the “music of the spheres” (or “musica universalis”), the grand, overarching idea that the revolution of the planets generates a celestial harmony or “music” of profound beauty. It has made a bit of a comeback in the shape of string theory (which maintains that the universe is made out of extremely small pieces of one-dimensional spaghetti vibrating at high speed). Lovelace reckoned that computers would be good at making music too: “The engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.”

One thing I do know about science is that it is a conspiracy. A collective undertaking. Try doing science on your own. You can’t, can you? You have to have other people around to compare notes with. You can’t bang in all those poles to build a radio telescope on your own. As Newton said, we can only see as far as we do because we are standing on the shoulders of giants. Everyone is standing on someone else’s shoulders. It’s not about great individuals. Science has been, for part of its history, a conspiracy against women. Perhaps it arose (as Foucault maintained) as a system of control. But at its best it is collaborative and cuts across time and frontiers and genders.

Andy Martin is the author of ‘Reacher Said Nothing: Lee Child and the Making of Make Me’. He teaches at the University of Cambridge
