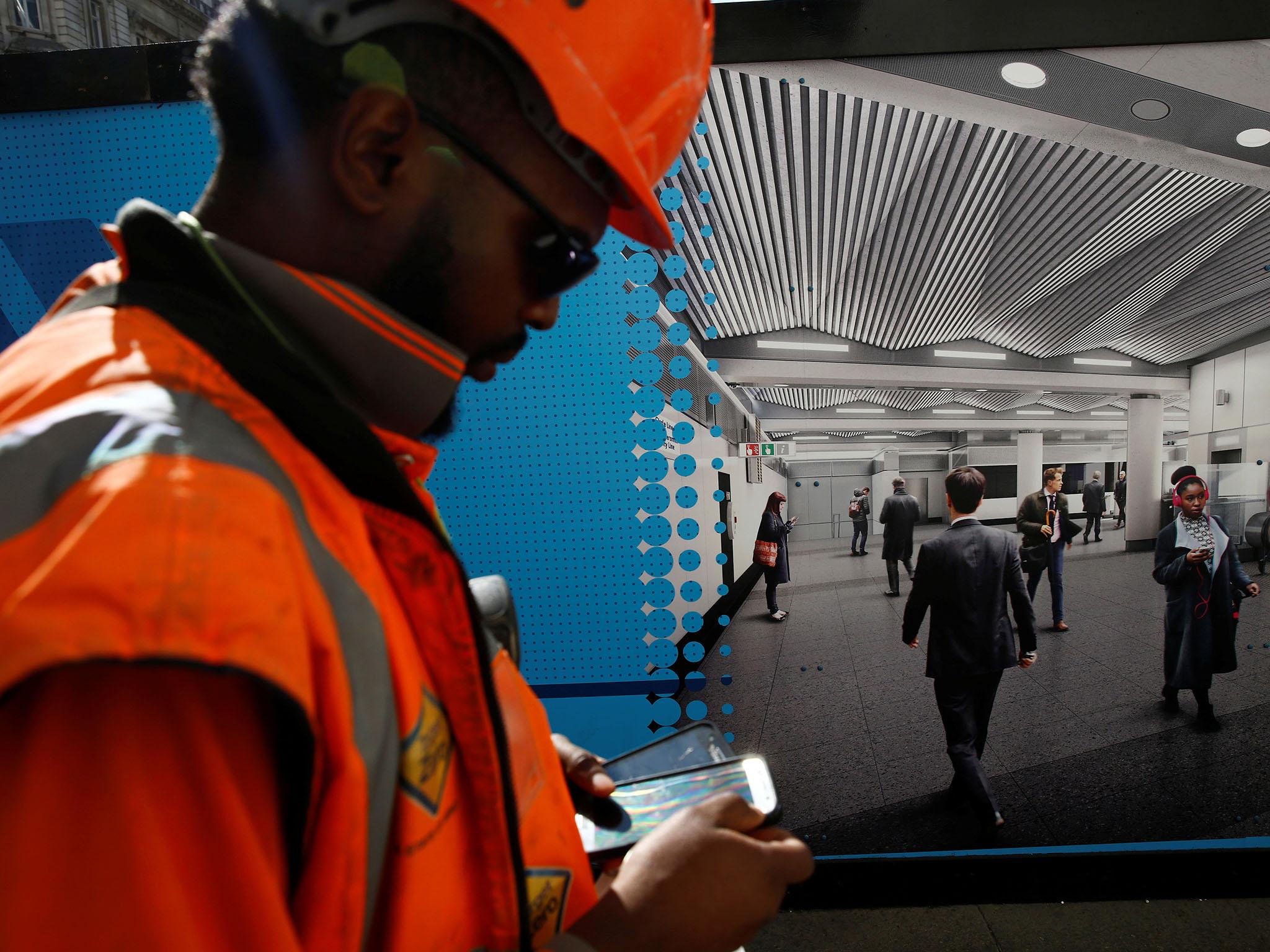

Adam Greenfield on emerging technology: 'God forbid that anyone stopped to ask what harm this might do us'

The tech author says we haven't given enough thought as to how these profound shifts affect us psychologically and as a society

With experience both in the tech world (he was Nokia’s head of user interface design) and in academia (a senior fellow in the LSE’s urban studies centre, LSE Cities), Adam Greenfield is well placed to analyse the political ramifications of today’s cutting-edge technologies. Below, Mathew Lawrence, senior research fellow at the Institute for Public Policy Research, questions Greenfield about the unrecognised effects that today’s technologies – from smartphones to 3D printing, AI, Bitcoin and blockchain – have on 21st century consciousness, and the possibilities they unearth for a better world.

One of the central arguments of your superb new book, Radical Technologies: The Design of Everyday Life (Verso, 2017), is the need to ask what new and emerging technologies do, what inequalities they create or reproduce, and where their blind spots lie.

I don’t know if you remember how Steve Jobs originally introduced the concept of the iPhone. He did it in a really clever way. He started by saying: “We’d like to announce a couple of different products today: a touchscreen iPod, a revolutionary mobile phone and a breakthrough internet device.” And then he repeated it a few times, in this sort of incantatory rhythm. After a couple of repetitions, people in the audience started to get it, and that’s when the applause really kicked in. That was the moment they understood for the first time that he was referring to one device that did all those things.

There’s a conscious level at which we purposively engage with a phone, and then there are all of the things that it’s doing in the background, that we tend not to be consciously aware of. So when you’re walking down the street having a conversation on the phone, it’s simultaneously recording your location, plotting that location on a virtual map, comparing it to the locations of venues of interest or other people in your social network, and so on.

Now, some of this is driven by the mechanical necessities of communication – for example, location recording results from your phone establishing “handshake” relationships with cellular base towers. And some of it is simply an artefact of business model, like most of the data analytics. But what’s of most concern to me is that this entire mode of being we enter into when we engage the world via smartphone exerts a remarkable influence over our behaviour, and the interface through which we mediate the world in all its diversity and complexity draws from a relatively circumscribed vocabulary, generated by a remarkably small number of people and institutions. And a consequence of this is that, while they may be doing 10,000 different things for us in the course of a day, our smartphones are all the while reproducing a very distinctive affect, and that affect corresponds to a particular ideology that was developed in a particular place, at a particular time in history, by identifiable individuals and institutions.

I find it extraordinary that there’s been relatively little attention to what that implicit ideology is. I truly cannot imagine life in 2017 without my smartphone or the pleasure, utility and convenience it furnishes me. So we aren’t particularly inclined to go digging into the matter. God forbid we ever thought deeply about it, and were confronted with the idea that it might be better for us if we don’t use this thing. Neither do I think there is a way we can meaningfully opt out at this point. We produce data; we are subjects of data.

This is a useful point of comparison in grasping how challenging it’s going to be to unwire the hold that scarcity has on our psyche. It may simply be that somebody my age – born when I was born, and into certain material and social circumstances – will never be fully at home with unlimited abundance in that regard, even if it is achieved. I don’t like to psychoanalyse people, but it is manifestly the case that the extremely wealthy people I know aren’t happy. Not that I feel particularly sorry for them compared with a lot of other people, of course, but I just don’t think that material abundance itself is the same thing as fulfilment. And it will do all of this whether or not we choose to actively participate.

This is a key theme of your book: that, to enable greater abundance and flourishing, the challenge is not about technical feasibility, but the politics that shape the use of technologies and the institutions we construct. With this in mind, can you explain what blockchain is, and its problematic political appeal?

It almost doesn’t matter what the blockchain actually is or how it works, because it doesn’t do what the popular media, and therefore the popular imagination, understand it to be doing. But at root, the idea is that if someone asserts that a given transaction or document or artefact has a specified provenance, then that assertion can be tested and verified to the satisfaction of all parties, computationally and in a distributed manner, without the need to invoke any centralised source of authority.

The blockchain does this because the people who devised it have a very deeply founded hostility to the state, and in fact to central authority in all its forms. What they wanted to do was establish an alternative to the authority of the state as a guarantor of reliability.

It’s all established computationally. But this procedure is still opaque, perhaps more opaque than any process of state, and certainly not accessible to any process of ordinary democratic review. So this is not in any way a trustless architecture, as it is so often claimed to be. It merely asks that we repose trust in a different set of mediating agencies, institutions and processes: ones that are distributed, global, and are in many ways less accountable. And above all this is because the blockchain and its operations are even more poorly understood by most people than the operations of state that they purport to replace.

I don’t find that to be a particularly utopian prospect. In fact, there’s something shoddy and dishonest about the argument. What the truly convinced blockchain ideologues aim to do is drain taxation and revenue away from the state, because – in the words of Grover Norquist – they want to shrink the state to the point that it can be drowned in a bathtub. This is their explicit ambition. Why shouldn’t we take them at their word?

You also probe the algorithmic management of economic life via the application of machine learning techniques to large, unstructured data sets. You argue that this is a huge and unprecedented intervention in people’s lives, despite us lacking knowledge on how crucial decisions made about our everyday existence are reached. How has this come to be and why is it so uninterrogated? Is there any way of democratising the algorithms that are increasingly governing our lives?

In the past, when approaching a bank for credit, there was presumably a loan officer who would have said, “Well, the guy’s got a great credit history, and he’s got references from all of his previous lenders. I chose to take a decision based on those credentials.” Or conversely, “He looked shifty. His credentials were flaky. His paperwork didn’t check out. That’s why I denied him the loan.” And maybe that human loan officer was accountable for such decisions. And there existed – again, in principle – procedures to reverse decisions that didn’t really pass the institution’s or the larger society’s smell test. But today, no one can tell you why your loan application came back the way it did. It could be something like whether or not you’ve chosen to fill out your application in block capitals, which evidently statistically correlates to other behaviours that indicate a higher or a lower propensity to repay your loans. And you’ll never, ever know that. All you’ll have is the result, the decision, and it won’t be subject to review or appeal.

Even the institution that relies on that algorithm won’t be able to say to any particular degree of assurance whether or not your handwriting’s been the triggering factor, or if it was something else in the cascade of decision gates that’s ultimately bound up in the way this kind of network does work in the world.

So the sophistication of these systems is rapidly approaching a point at which we cannot force them to offer up their secrets. And we’re about to compound the challenge further. For example, AlphaGo and its successors are designing their own core logics. So increasingly, human intelligence is no longer crafting the algorithmic tools by which decisions are reached.

Automation technologies – machine learning, advanced robotic sensors, AI, the growing “internet of things” – constitute a coherent set of techniques and technical powers for an increasingly post-human economy in which labour is gradually made more superfluous to production. At the same time, discussions about the potential impact of automation are often breathless and overstate, at least in the short term, the likely implications, particularly for employment. Could you take us through how you think automation is likely to reshape our economy and society?

You and I have just gone to buy coffee from a relatively upmarket coffee place. And if you remember, in that transaction, there was a device with a customer-facing camera mounted at the point of sale. I would wager, based on my experience, that this has been installed to eventually do away with the need for a card or payment device of any kind. It’s attempting to use facial recognition as our payment credential. That’s why there’s a camera there. I would be very surprised if it’s for any reason but that.

What was fascinating to me about that interaction was that the person who rang up our transaction didn’t know or care what that device was. The nature of her employment compels her, like any employee in a similar position, to accept whatever proposition the vendor of that technology has made to the employer.

This has always been the case since the beginning of Taylorist and Fordist labour operations, of course, which break what might have been a skilled craft job up into little deskilled modules that can be repeated. Workers can be trained up easily, and their performance can more readily be policed, measured and monitored. But I find that this is even more so in the contemporary workplace, and not only on the shop floor but in the preserves of what used to be thought of as more creative, intellectual or managerial labour.

It leads to a kind of learned helplessness, in which people simply do accede to whatever technology appears on the scene – we saw this just now in the café. The cashier was like, “Oh. Well, it’s just whatever the latest thing is. This is just how we’re going to do things now.”

There are two things going on, both of which are equally scary to me. The first is that people just aren’t curious about what this machine in front of them is doing, and above all why it’s been asked to do that. The other thing is that even if they do know, they’re just like, “Well, I don’t have the power to change that.” And on that assessment, given the politics of solidarity in the contemporary working environment, they’re probably correct.

If labour is imperilled, both because of emerging technologies and because of political, economic and legal changes over the past 30 to 40 years, is that why you engage with the “fully automated luxury communism” movement in the book? You take issue with the argument that it is an ethical imperative to move to a world in which much of the labour world is basically superfluous to needs, given the power imbalances laced through our economy.

We’re not prepared psychically or culturally for a world of full leisure. I don’t think we understand what a planet of nine billion people with a whole lot of time on their hands looks like. It is always possible that I’m generalising from my own idiosyncratic experience, but I need a project. I need to feel like I’m producing something useful for other people, or else I simply don’t feel very good about myself.

I’m certainly not arguing in favour of bullshit jobs or busy work. But there is a naiveté in the articulation of what’s been called “fully automated luxury communism”.

I don’t think the folks responsible for developing this line of argument have really quite reckoned with what it’s like when each one of us can have anything we want whenever we want it. I don’t necessarily know if that’s good for human psyches.

The series of radical technologies you talk about may one day profoundly change not just the relationship between employment and the production of value, but the nature of a commodity and how we conceive of scarcity. Are we prepared for the foundations of society being changed in this way? If not, how do we prepare politically?

Scarcity is more deeply ingrained in us than we understand. I’m not a nutritionist, for example, but I take it as given that the obesity problem so many societies are contending with largely results from the massive availability of calories beyond anything in the organic history of our species.

Many of these technologies are ultimately rooted in the capture, colonisation and monetisation of data that we create via use of the platform or the technology. How can we think differently about the governance and ownership of both data and data infrastructure? Are there different ways of conceiving of the infrastructures that sit behind these vast engines of capital accumulation?

Yes, there are, absolutely there are. The trouble is that so many questions that appear to be purely technical in nature present us with vexingly complicated implications socially or psychologically. I’ll give you an example. For many years, I was passionately involved in a movement calling for open municipal data. That to me seemed so clearly preferable to the existing model where the data was held close, was restrictively licensed, and was provided only to the large corporate vendors who happened to be the municipality’s partners. I thought that, given that each of us generated that information in the first place, we would be better off if we could have access to it and make such use of it as we would.

And then Gamergate [the video game online protest that was accused of being a hate campaign] happened. One of the things we saw in Gamergate, which should have been obvious in retrospect, was how trivially open data can be weaponised by people who do not feel themselves bound by the same social contract as the rest of us. If neither the restrictive release of data to favoured partners nor its open availability produces desirable social outcomes, what’s left?

Here, ironically perhaps, is where another use case for the blockchain arises. Maybe access to municipal data is free of charge, but individual requests for data are logged in a distributed ledger so anybody can see who’s asked for what, and what they’ve done with it. But that feels a lot like a technical fix for a social problem.
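As a rough illustration of the logging idea Greenfield describes, the sketch below keeps a tamper-evident record of data requests by chaining each entry to the hash of the one before it. It is a single-machine hash chain, not a distributed ledger: there is no network, no consensus and no replication, and the function names, field names and example requesters are all hypothetical. It only shows why an altered entry becomes detectable to anyone who re-checks the chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder "previous hash" for the first entry


def entry_hash(prev_hash, requester, dataset):
    """Hash one log entry together with the hash of the entry before it."""
    payload = json.dumps(
        {"prev": prev_hash, "requester": requester, "dataset": dataset},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_request(log, requester, dataset):
    """Record who asked for which dataset, linked to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {
            "prev": prev_hash,
            "requester": requester,
            "dataset": dataset,
            "hash": entry_hash(prev_hash, requester, dataset),
        }
    )


def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        expected = entry_hash(entry["prev"], entry["requester"], entry["dataset"])
        if entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_request(log, "resident-42", "bus-timetables")      # hypothetical requester
append_request(log, "acme-analytics", "parking-permits")  # hypothetical requester
print(verify(log))  # True

log[0]["requester"] = "someone-else"  # quietly rewrite history
print(verify(log))  # False
```

The sketch also bears out the caveat in the answer above: the code can show that a record was changed, but it says nothing about who is accountable for the data or what requesters go on to do with it.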

I want to turn to the conclusion of your book. You say that there’s no unitary future waiting for us, that we shouldn’t just await our technological liberation but actually politically organise, demand it, and create institutions for that outcome. What are the key lessons we should take from your book?

I would break it up into two parts. The first is to always be self-critical about what we mean when we talk about using some technology “for good,” what we define as broad human benefit. Benefit for whom, and at what cost? We must be clear about that, and make arguments that indicate we understand there is a cost to our choices, and that that cost generally has to be borne by someone or -ones. To make robust arguments that we think that the collective outcome will be improved to a degree by our actions that justifies the costs that are borne. That’s the first thing.

Secondly, I find the calls that everyone should learn to code quite fatuous, but I do think that the left needs to be better at not surrendering the terrain of engineering and emergent technology. It’s almost a question of neurocognitive inclination. I will speak for those of us who got into the humanities. We were perhaps spurred toward the humanities by a disinclination to deal with numbers, or structured facts, in quite the same way that “the hard sciences” do, or engineering does, or applied science. But this is something we’re going to have to overcome, because otherwise the only people who are going to develop that affinity into practice are the people who are immersed in the politics of the domains in which those practices are articulated. And broadly speaking, they split into authoritarian and techno-libertarian lobes. Those are just the rules of the game right now.

I think that we need to be braver about understanding code. Understanding what an API is, how it works – understanding network topographies, and what the implications of network topographies are for the things which flow across and between their nodes. Understanding corporate governance. Understanding all these things that so many of us who think of ourselves as being on the left prefer not to address. We need badly to develop expertise in these things so that we can contest them, because otherwise it’s black boxes all the way down. We don’t know what these technologies do or how they work. We just don’t see what politics they serve.

There are what we call in the business “weak signals” all across the horizon. There are emergent groups and conversations that are happening, places and scenes in which people with progressive politics are developing the kind of facility with networked digital information technologies I think is so necessary. But they’re all green shoots, and they all need to be nurtured. For the rest of my life, that’s part of what I see my role as being: helping these conversations identify themselves to one another so that they can join and develop greater rigour and greater purchase on the disposition of resources in the world.

Mathew Lawrence is senior research fellow at the Institute for Public Policy Research. This is an edited version; the full verbatim interview appears in IPPR’s Progressive Review.
