Bumble launches new feature that automatically blurs nude images

'The safety of our users is our number one priority'

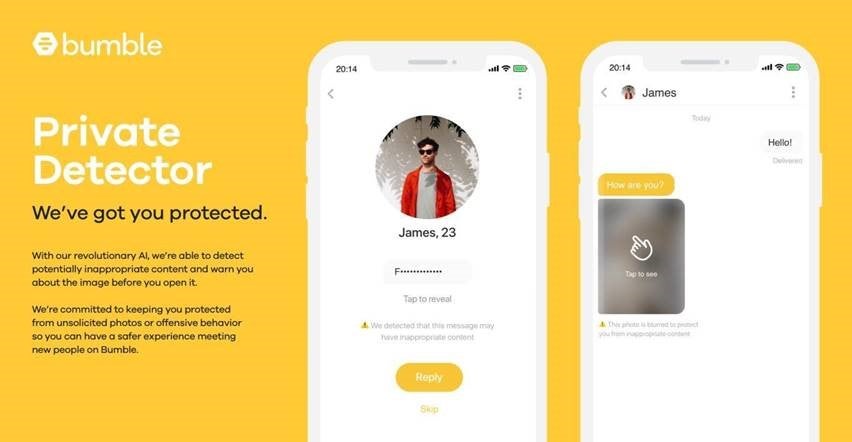

Dating app Bumble has launched a new feature that automatically blurs nude images.

Earlier this year, the female-forward app, which encourages women to make the first move when they match with men, announced the development of its Private Detector tool.

Using artificial intelligence, the tool scans images in real time to detect, with 98 per cent accuracy, whether a user has been sent inappropriate content.

The feature, which has now been rolled out across the globe and was announced on Bumble's Instagram account, was championed by Whitney Wolfe Herd, founder and CEO of Bumble, and Andrey Andreev, founder of MagicLab, which owns dating apps Badoo, Bumble, Lumen and Chappy.

Texas-born Wolfe Herd recently worked with lawmakers in the US state to make the sharing of unsolicited lewd photographs a punishable crime, legislation that came into effect in August.

When a Bumble user is sent an image or message that the Private Detector tool has deemed inappropriate, a message appears on their screen to alert them.

They are then offered the option to tap to reveal the picture or message in question.

“The digital world can be a very unsafe place overrun with lewd, hateful and inappropriate behaviour,” said Wolfe Herd.

“There’s limited accountability, making it difficult to deter people from engaging in poor behaviour.”

Andreev added that the safety of people who use dating apps is the company’s “number one priority”.

“The development of ‘Private Detector’ is another undeniable example of that commitment,” he stated.

Several Instagram users praised the rolling out of the new feature.

“Someone tried sending me a nude and I was SO happy when this popped up,” one person wrote, in reference to the Private Detector tool. “Guys – send dog pictures, not d*** pictures.”

Another person commented: “Finally.”

Cyber flashing, when a person is sent a sexually explicit image or message without their consent via digital means, has been illegal in Scotland since 2010.

However, it is not a criminal offence in England or Wales.

Earlier this year, the UK Ministry of Justice announced that a public consultation would be held to determine whether laws regarding cyber flashing and other sexual offences should be strengthened, after campaigners called for changes to be made.

