The cutting edge of prosthetics: How researchers are trying to give back the ability to feel

The sense of touch is crucial to human experience, and yet neuroscientists still have little idea how it actually works. Alexandra Ossola reports.

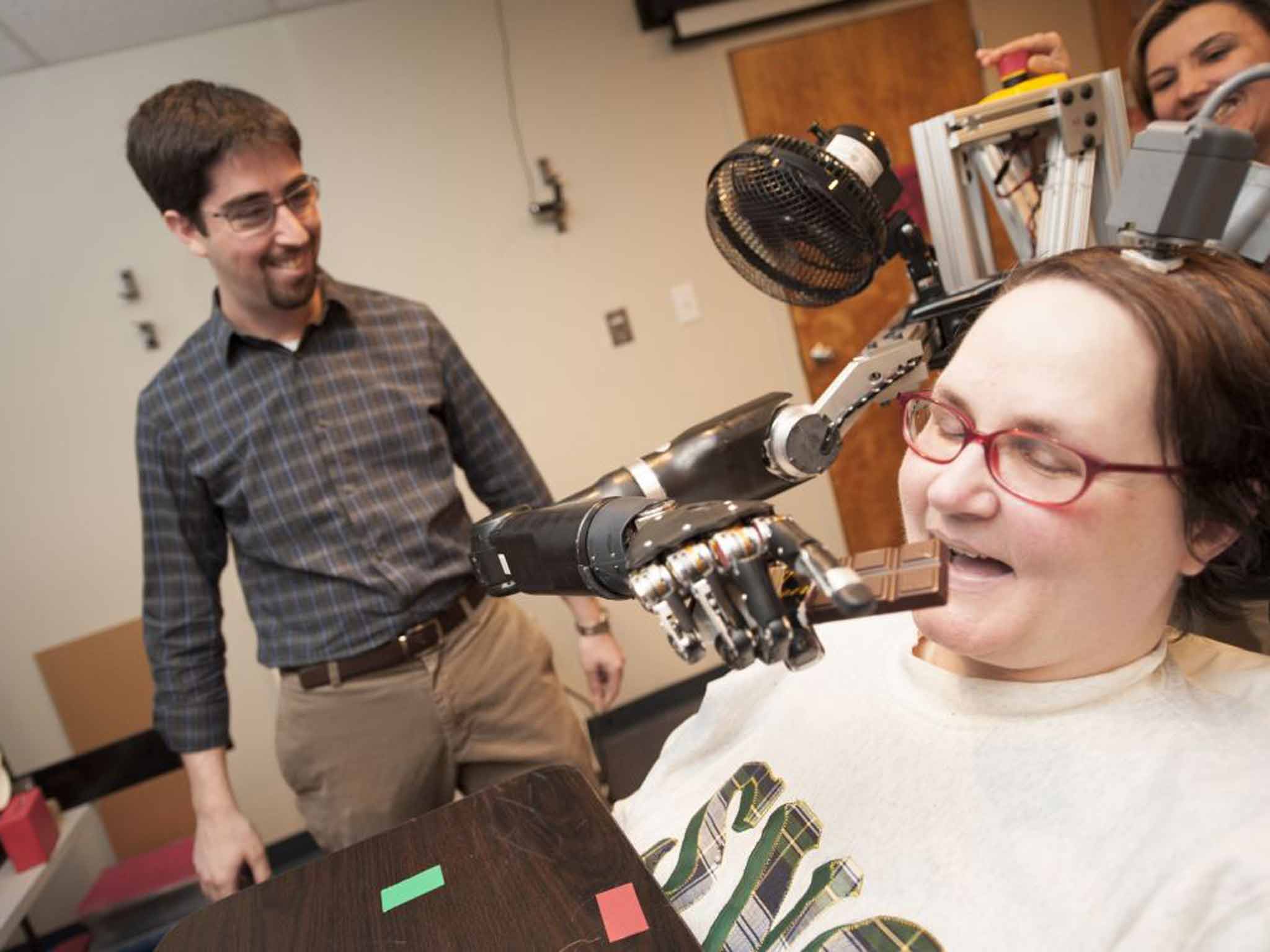

In 2012, Jan Scheuermann ate the most satisfying bite of chocolate of her life. A decade earlier, Scheuermann was diagnosed with spinocerebellar degeneration, a progressive disease that confined her to a wheelchair, paralysed from the neck down. At age 53, she volunteered for a study through the University of Pittsburgh that would allow her to operate a prosthetic arm using only her mind. She underwent surgery to have two nodes implanted on the surface of her brain, which would read the neurons' activity when she thought about moving her shoulder and her wrist.

After a few weeks of retraining her brain, the researchers hooked up some wires that linked the nodes on the top of her head to a prosthetic arm that sat on a cart beside her. She practised grabbing objects and moving them with different turns of the prosthetic wrist. Then researchers decided she was ready to go bigger.

"When they asked me for a goal, I was being facetious and going for a laugh when I replied that I would like to feed myself chocolate," she says. "Instead of laughing, the scientists looked at each other and nodded and said, 'Yeah, we should be able to do that'." After a few weeks of training, she put the chocolate in her mouth, then whisked the prosthetic arm away quickly, closed her eyes and enjoyed the bite. Scheuermann was moving objects for the first time in a decade – a real science and technology miracle. But there was still one thing missing: the prosthetic didn't give her back the ability to feel.

Mind-operated prosthetics, or neuroprosthetics, are at the cusp of how neuroscientists can manipulate our sense of touch. But giving Scheuermann the ability to feed herself is only part of the challenge – researchers are also trying to bring sensory information back to the user. "Right now, the feedback is the vanguard; it's much further behind than the motor [movement] part," says Daniel Goldreich, a professor of neuroscience at McMaster University in Ontario. Decades of research have given scientists a good understanding of the pathways that transmit input from the outside world to our brains – as long as we smell, hear or taste that information. But not if it comes through touch.

"I think touch is one of the most poorly studied senses," says Alison Barth, a professor of biology at Carnegie Mellon University in Pittsburgh. Though touch can have a lot of emotional significance, she says, it tends to be less important than sight and sound in terms of day-to-day communication, which is why it may not have been studied as thoroughly in the past. Plus, our sense of touch is always on, so we tend to take it for granted.

Here's what we do know about how somatosensory signals, or "touch information", reach the brain: when I reach out to pick up a glass of water, the nerves that start in my palm and fingertips transmit this specific sensory information as electrical signals up the long chains of nerve cells, one-fifth the width of a hair but several feet long, through my arm to my spinal cord. From there, the signal is transmitted to the brain, where it's decoded to create a complete picture of what I'm touching. Each specific attribute, like temperature, texture, shape or hardness, has its own nerve pathway in this transmission process from fingertip to brain.

"You have all these different flavours of touch receptors that are all mixed together like multicoloured jellybeans, but the information is totally discrete – you don't confuse cold with pressure," Barth says. She estimates that the skin is laced with around 20 different types of tactile nerves for all these different sensations. But researchers aren't sure how our brain gets such a clear picture of an object such as that water glass.

"We don't know how the brain decodes this pattern of electrical impulses," says Goldreich. That's true for the sense of touch, he says, and also for how all of our senses combine; when I see an iPhone sitting on a desk, I can imagine what it would be like to hold in my hand, what the "unlock" sound is like, how it feels to hold it up to the side of my face. Though decades of research have helped scientists figure out the parts of the brain involved, they haven't been able to quite nail down how the information is compiled.

Barth's team recently published a paper in the research journal Cell, looking more closely at the neurons in a particularly deep part of the brain called the thalamus, which we know plays a key role in transmitting sensory signals. They wanted to zero in on the exact neurons involved in a particular sensation, so they stimulated a mouse's whiskers, which are so sensitive that they are thought to be analogous to human fingers. To see the signal in the thalamus, Barth and her team mutated a gene that makes neurons fluoresce when they fire frequently, and cut a literal window in the mouse's skull so they could watch the brain cells light up.

When the researchers blew puffs of air at just one whisker, they saw neurons light up in two different parts of the thalamus before relaying their signal up to the cortex, the part of the brain responsible for memory and conscious thought. When they stimulated several whiskers at once, the signal was the same for the neurons in one part of the thalamus but different in another. To Barth, that meant information from the same part of the body – in this case, the whiskers – was transmitted differently to the cortex, depending on whether one or many were stimulated. This hinted at the "neural underpinnings" of how touch works in the thalamus. Though the researchers couldn't see all of it, there was clearly a pattern to how the neurons activated, illuminating a subsystem of neural pathways that researchers had never before detected.

Work like Barth's is bringing neuroscientists closer to understanding touch in the brain, which has many implications. Researchers predict a dramatic change in the design of smartphones, for example. Phones already give you some somatosensory feedback, like vibrating when you receive a text or buzzing when you press a button. But phones could do a lot more to tap into our sense of touch. "The thing I hate about my smartphone is I can't do anything without looking at it," Barth says. She anticipates that phone interfaces could change textures over important buttons to show users where to click, which could also help the blind use touchscreens.

Goldreich predicts that screens will be able to go even further; by manipulating the electrical input, scientists could make video calls a multi-sensory experience, where touching the computer screen feels like touching the person's face or hand. "That would require us to understand and come up with ways to activate those sensors in your hand, but we're actually pretty close to doing that," Goldreich says.

Researchers have found other, more holistic ways to help the blind and the deaf through increasingly sophisticated sensory substitution systems. One system, called VEST, picks up sounds with a microphone and translates them into patterns of vibrations on the skin. Over time, the brain learns to interpret this tactile information as auditory signals; eventually, it enables the deaf to understand auditory cues. Others are doing similar work for the blind, turning a camera feed into pixels that vibrate the skin in particular ways and effectively make it possible for a user to "see".

"The dream of that sort of research is to try to give someone vision through another sensory system," Goldreich says. It's a world that may soon become a reality: Some of these applications, like textured touchscreens and sensory substitution devices, might become available in the next decade or two, he says.

And, of course, one of the most important applications of an improved science of touch is the potential for better neuroprosthetics. Andrew Schwartz, a professor of neurobiology at the University of Pittsburgh, leads a team that recently designed a prosthetic arm that can bring very basic sensory feedback to its user. Its hand has sensors that generate small jolts of electricity to stimulate the same neural pathways that would activate with input from a typical human hand. But because neuroscientists are still figuring out just how much electricity the nerves send to the brain for different sensations, the design's feedback is still "crude" at best.

"We don't understand the code or the level for how crude electrical activation gets changed into neural activation," Schwartz says. Without that understanding, the feedback will continue to be rudimentary, unable to incorporate the constantly changing information of a moving hand. But little by little, the feedback is becoming more sophisticated; thanks to research like Barth's, Schwartz and his team have a better understanding of which neurons in particular respond to a stimulus and when they start responding, which could help them mathematically compute just how much electricity the nerves bring to the brain.

Developing more sophisticated devices could also help researchers better understand how our sensory experiences translate into our overall wellbeing. Though all of our senses have deep ties to our emotional selves, touch is particularly powerful; for decades psychologists have highlighted the importance of human contact to babies' neurological development and adults' ability to communicate their emotions. Those who have lost it, like Scheuermann, can attest to how much touch matters. But researchers haven't really explored the potential of manipulating touch to address health problems. Barth says there is the possibility touch could be used to treat conditions like depression and anxiety. "Forms of touch like skin-to-skin contact might have enormous influence over our emotional wellbeing," she says. "We haven't taken advantage of that."

Scheuermann says she would be interested in a prosthetic device that gave her feedback – but only if it really delivered on touch's emotional component. She doesn't have much use for such a device if it only allowed her to "connect with an object", she says. But she would be happy to use it "if [the device] allowed me to feel the touch of someone touching my hand, if it let me feel not only that I was touching a person but also feel the warmth and texture of her hand".

© Newsweek

Life and limb: the past and future of prosthetics

In 2000, archaeologists in Egypt discovered a prosthetic big toe attached to the foot of a 3,000-year-old mummy. Researchers initially believed the leather sock with its wooden digit had been added to the nobleman's body as part of a burial ritual. For many ancient Egyptians, deformities were concealed even in the tomb as a sign of respect. But the appendage showed signs of wear and, in 2012, scientists at the University of Manchester published the results of an experiment using a replica and a toeless volunteer. Tests showed that the Egyptian toe had been a simple but highly effective and – for its day – hi-tech prosthesis, providing about 80 per cent of the flex and push-off power of the tester's intact left toe.

The Cairo toe is the oldest known prosthesis. But for as long as man has had limbs and lost them, he has tried to replace them. And while today's most advanced prosthetics incorporate some of the highest tech anywhere, their development has been slow.

Armourers in the Dark Ages used iron to build new arms and legs for the soldiers whose limbs they could not protect. But they were often crude affairs. The first big breakthrough came in the 16th century when Ambroise Paré, a French military doctor, invented a hinged hand and legs with working knees. New materials such as carbon fibre have transformed static false limbs, but only since prostheses have become smart have we begun to replicate the full ingenuity of our own bodies.

Last December, a team at Johns Hopkins University Applied Physics Laboratory in the US revealed a pair of full prosthetic arms that strap to the shoulders of Les Baugh, who lost both arms in an electrical accident 43 years ago. The unit includes sensors that translate the tiny movements of his chest muscles, initiated by signals that his brain still sends, into movement of the arms and hand. He can even "move" individual fingers.

Similar muscle-sensing technology powers a rival hand invented by Dean Kamen of Segway fame. His Luke Hand, named after fictional prosthesis user Luke Skywalker, is capable of picking up eggs and opening envelopes.

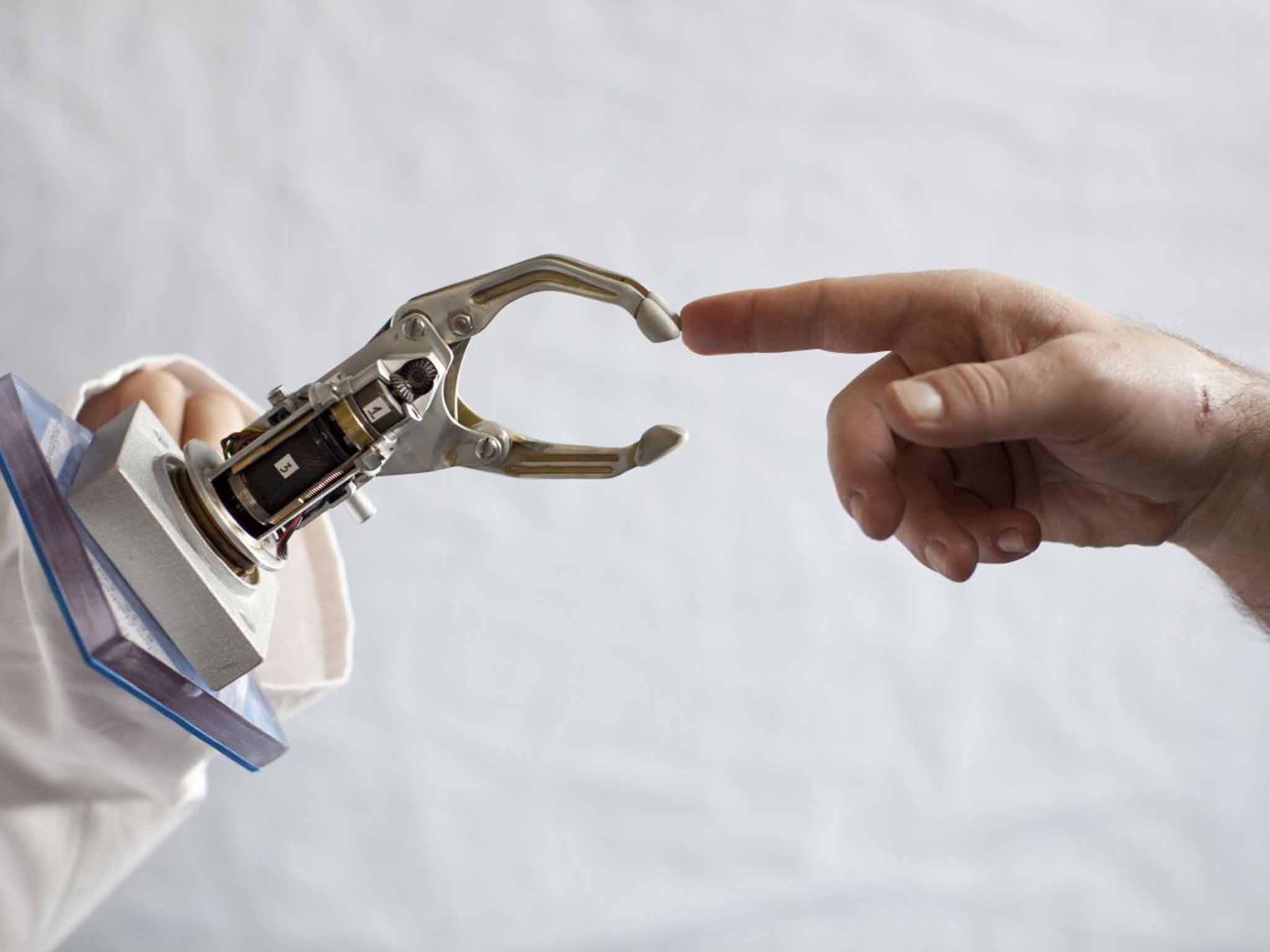

As the fluidity and mobility of these limbs keep advancing, a new front in the science of prostheses has emerged: touch. Several research teams around the world are developing ways to place artificial sensors on the "skin" of false limbs, to be wired into the body's nervous system, complementing the connection that has already been made between brain, muscle and limb. Last year, a Danish tester called Dennis Aabo Sørensen, who had lost an arm in a fireworks accident, handled different objects while blindfolded, in an experiment in Rome. "I could feel round things and soft things and hard things," he said. "It's so amazing to feel something that you haven't been able to feel for so many years."
