AI weapon development is a ‘moral imperative’, says US panel led by former Google boss

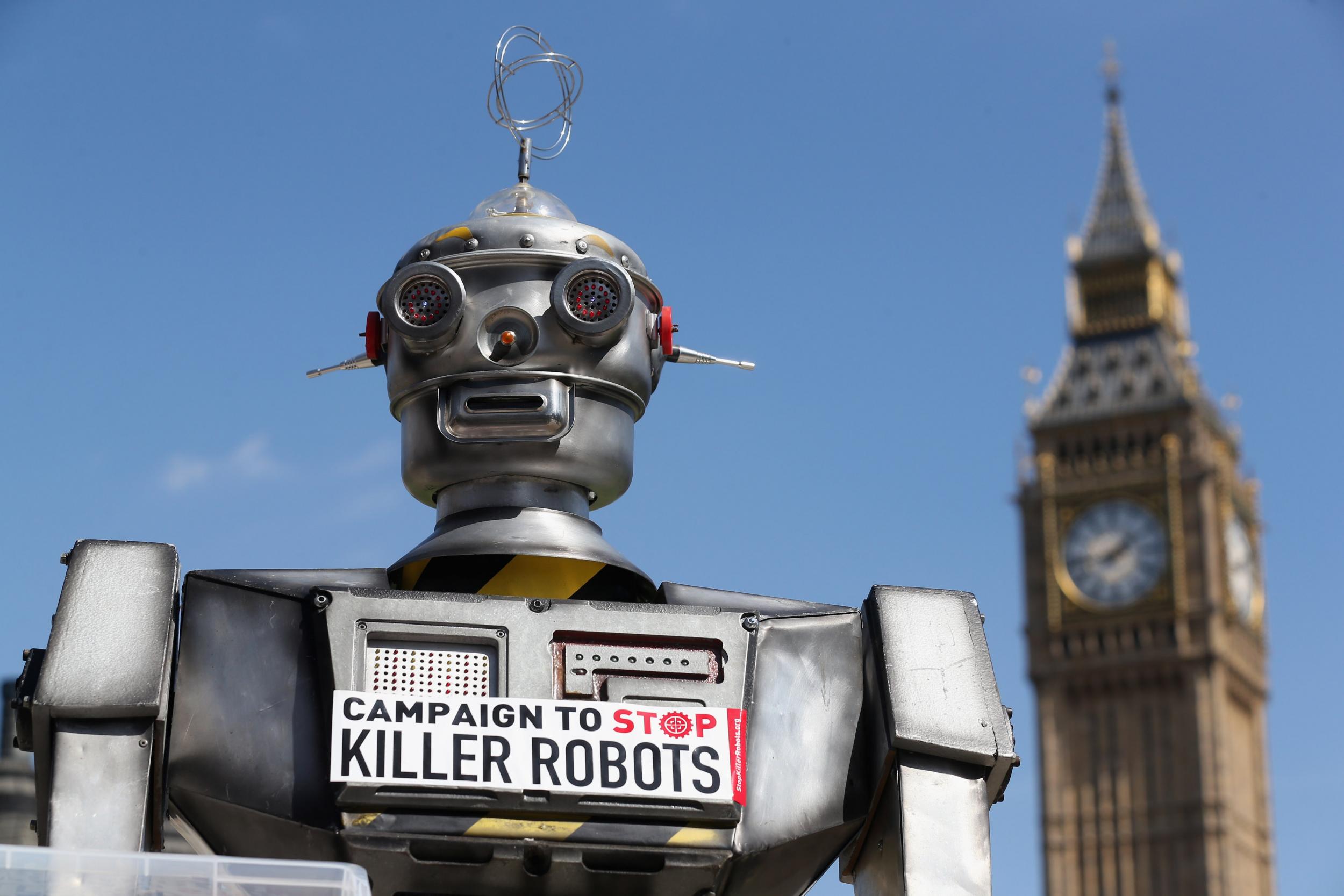

Dozens of countries have already called for a complete ban on ‘killer robots’

The US should continue to explore the development and use of AI-powered autonomous weapons despite calls for them to be banned, a commission led by former Google boss Eric Schmidt has concluded.

The government-appointed panel filed a draft report to Congress on Tuesday after two days of discussions centred on the use of artificial intelligence by the world’s biggest military power.

Robert Work, the panel’s vice chairman and a former deputy secretary of defence, said autonomous weapons are expected to make fewer mistakes than humans do in battle, reducing the casualties and skirmishes caused by target misidentification.

"It is a moral imperative to at least pursue this hypothesis," he said.

The discussion waded into a controversial frontier of human rights and warfare. For about eight years, a coalition of non-governmental organisations has pushed for a treaty banning "killer robots," saying human control is necessary to judge the proportionality of attacks and to assign blame for war crimes. Thirty countries, including Brazil and Pakistan, want a ban, according to the coalition's website, and a United Nations body has held meetings on the systems since at least 2014.

While autonomous weapon capabilities are decades old, concern has mounted with the development of AI to power such systems, along with research finding biases in AI and examples of the software's abuse.

The US panel, called the National Security Commission on Artificial Intelligence, acknowledged the risks of autonomous weapons in its meetings this week. A member from Microsoft Corp, for instance, warned of pressure to build machines that react quickly, which could escalate conflicts.

The panel wants only humans to make decisions on launching nuclear warheads.

Still, the panel prefers anti-proliferation work to a treaty banning the systems, which it said would be against US interests and difficult to enforce.

Mary Wareham, coordinator of the eight-year Campaign to Stop Killer Robots, said the commission's "focus on the need to compete with similar investments made by China and Russia... only serves to encourage arms races."

Beyond AI-powered weapons, the panel's lengthy report recommended the use of AI by intelligence agencies to streamline data gathering and review; $32 billion in annual federal funding for AI research; and new bodies, including a digital corps modelled after the army's Medical Corps and a technology competitiveness council chaired by the US vice president.

The commission is due to submit its final report to Congress in March, but the recommendations are not binding.

“We believe that Google should not be in the business of war,” a 2018 letter from Google employees protesting the company's work on the Pentagon's Project Maven stated. “We cannot outsource the moral responsibility of our technologies to third parties.”

Additional reporting from agencies.
